Introduction to agricolae

Felipe de Mendiburu1, Muhammad Yaseen2

2020-05-02

Source:vignettes/Intro_agricolae.Rmd

Intro_agricolae.Rmd

- Professor of the Academic Department of Statistics and Informatics of the Faculty of Economics and Planning.National University Agraria La Molina-PERU.

- Department of Mathematics and Statistics, University of Agriculture Faisalabad, Pakistan.

Preface

The following document was developed to facilitate the use of agricolae package in R, it is understood that the user knows the statistical methodology for the design and analysis of experiments and through the use of the functions programmed in agricolae facilitate the generation of the field book experimental design and their analysis. The first part document describes the use of graph.freq role is complementary to the hist function of R functions to facilitate the collection of statistics and frequency table, statistics or grouped data histogram based training grouped data and graphics as frequency polygon or ogive; second part is the development of experimental plans and numbering of the units as used in an agricultural experiment; a third part corresponding to the comparative tests and finally provides agricolae miscellaneous additional functions applied in agricultural research and stability functions, soil consistency, late blight simulation and others.

Introduction

The package agricolae offers a broad functionality in the design of experiments, especially for experiments in agriculture and improvements of plants, which can also be used for other purposes. It contains the following designs: lattice, alpha, cyclic, balanced incomplete block designs, complete randomized blocks, Latin, Graeco-Latin, augmented block designs, split plot and strip plot. It also has several procedures of experimental data analysis, such as the comparisons of treatments of Waller-Duncan, Bonferroni, Duncan, Student-Newman-Keuls, Scheffe, Ryan, Einot and Gabriel and Welsch multiple range test or the classic LSD and Tukey; and non-parametric comparisons, such as Kruskal-Wallis, Friedman, Durbin, Median and Waerden, stability analysis, and other procedures applied in genetics, as well as procedures in biodiversity and descriptive statistics, Mendiburu (2009)

Installation

The main program of R should be already installed in the platform of your computer (Windows, Linux or MAC). If it is not installed yet, you can download it from the R project https://www.r-project.org/ of a repository CRAN.

install.packages("agricolae")

Once the agricolae package is installed, it needs to be made accessible to the current R session by the command:

library(agricolae)

For online help facilities or the details of a particular command (such as the function waller.test) you can type:

For a complete functionality, agricolae requires other packages

MASS: |

for the generalized inverse used in the function PBIB.test

|

nlme: |

for the methods REML and LM in PBIB.test

|

klaR: |

for the function triplot used in the function AMMI

|

cluster: |

for the use of the function consensus

|

AlgDesign: |

for the balanced incomplete block design design.bib

|

Use in R

Since agricolae is a package of functions, these are operational when they are called directly from the console of R and are integrated to all the base functions of R. The following orders are frequent:

detach(package:agricolae) # detach package agricole library(agricolae) # Load the package to the memory designs<-apropos("design") print(designs[substr(designs,1,6)=="design"], row.names=FALSE)

[1] "design.ab" "design.alpha" "design.bib" "design.crd"

[5] "design.cyclic" "design.dau" "design.graeco" "design.lattice"

[9] "design.lsd" "design.rcbd" "design.split" "design.strip"

[13] "design.youden" For the use of symbols that do not appear in the keyboard in Spanish, such as:

~, [, ], &, ^, |. <, >, {, }, \% or others, use the table ASCII code.library(agricolae) # Load the package to the memory:

In order to continue with the command line, do not forget to close the open windows with any R order. For help:

Data set in agricolae

A<-as.data.frame(data(package="agricolae")$results[,3:4]) A[,2]<-paste(substr(A[,2],1,35),"..",sep=".") head(A)

Item Title

1 CIC Data for late blight of potatoes...

2 Chz2006 Data amendment Carhuaz 2006...

3 ComasOxapampa Data AUDPC Comas - Oxapampa...

4 DC Data for the analysis of carolina g...

5 Glycoalkaloids Data Glycoalkaloids...

6 Hco2006 Data amendment Huanuco 2006...Descriptive statistics

The package agricolae provides some complementary functions to the R program, specifically for the management of the histogram and function hist.

Histogram

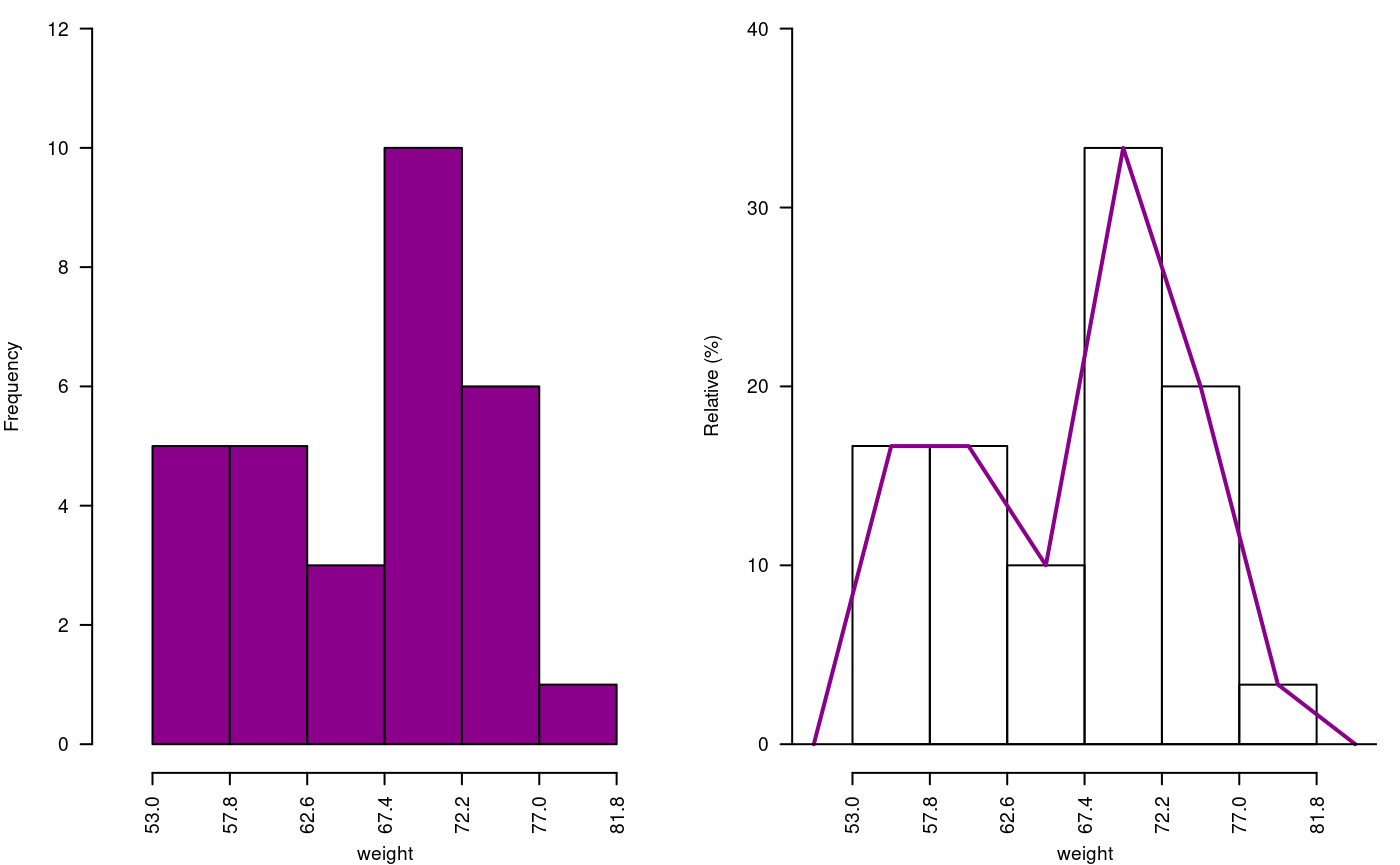

The histogram is constructed with the function graph.freq and is associated to other functions: polygon.freq, table.freq, stat.freq. See Figures: @ref(fig:DescriptStats2), @ref(fig:DescriptStats6) and @ref(fig:DescriptStats7) for more details.

Example. Data generated in R . (students’ weight).

weight<-c( 68, 53, 69.5, 55, 71, 63, 76.5, 65.5, 69, 75, 76, 57, 70.5, 71.5, 56, 81.5, 69, 59, 67.5, 61, 68, 59.5, 56.5, 73, 61, 72.5, 71.5, 59.5, 74.5, 63) print(summary(weight))

Min. 1st Qu. Median Mean 3rd Qu. Max.

53.00 59.88 68.00 66.45 71.50 81.50 oldpar<-par(mfrow=c(1,2),mar=c(4,4,0,1),cex=0.6) h1<- graph.freq(weight,col=colors()[84],frequency=1,las=2, ylim=c(0,12),ylab="Frequency") x<-h1$breaks h2<- plot(h1, frequency =2, axes= FALSE,ylim=c(0,0.4),xlab="weight",ylab="Relative (%)") polygon.freq(h2, col=colors()[84], lwd=2, frequency =2) axis(1,x,cex=0.6,las=2) y<-seq(0,0.4,0.1) axis(2, y,y*100,cex=0.6,las=1)

Absolute and relative frequency with polygon

par(oldpar)

Statistics and Frequency tables

Statistics: mean, median, mode and standard deviation of the grouped data.

stat.freq(h1)

$variance

[1] 51.37655

$mean

[1] 66.6

$median

[1] 68.36

$mode

[- -] mode

[1,] 67.4 72.2 70.45455Frequency tables: Use table.freq, stat.freq and summary

The table.freq is equal to summary()

Limits class: Lower and Upper

Class point: Main

Frequency: Frequency

Percentage frequency: Percentage

Cumulative frequency: CF

Cumulative percentage frequency: CPF

Lower Upper Main Frequency Percentage CF CPF

53.0 57.8 55.4 5 16.7 5 16.7

57.8 62.6 60.2 5 16.7 10 33.3

62.6 67.4 65.0 3 10.0 13 43.3

67.4 72.2 69.8 10 33.3 23 76.7

72.2 77.0 74.6 6 20.0 29 96.7

77.0 81.8 79.4 1 3.3 30 100.0Histogram manipulation functions

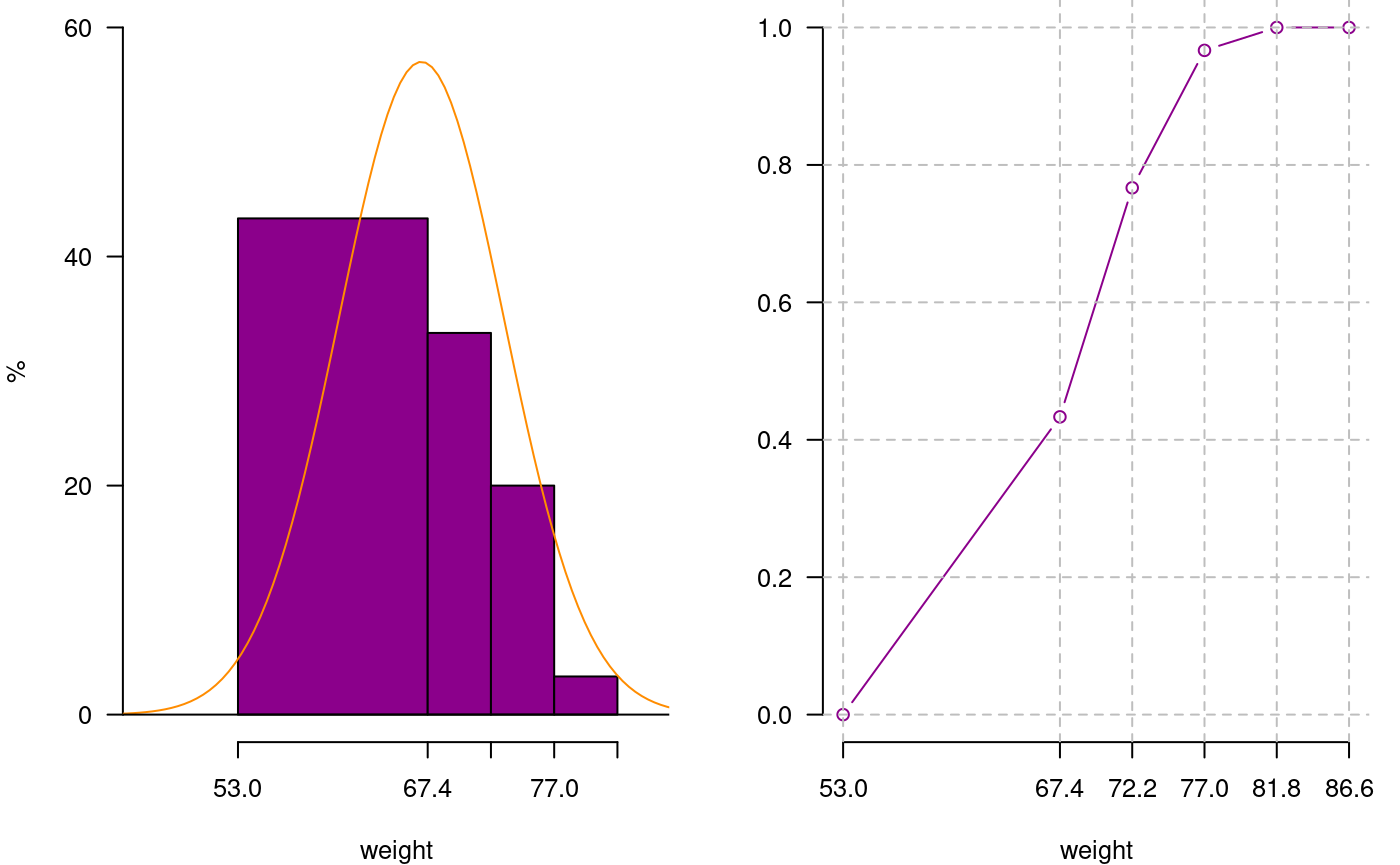

You can extract information from a histogram such as class intervals intervals.freq, attract new intervals with the sturges.freq function or to join classes with join.freq function. It is also possible to reproduce the graph with the same creator graph.freq or function plot and overlay normal function with normal.freq be it a histogram in absolute scale, relative or density . The following examples illustrates these properties.

sturges.freq(weight)

$maximum

[1] 81.5

$minimum

[1] 53

$amplitude

[1] 29

$classes

[1] 6

$interval

[1] 4.8

$breaks

[1] 53.0 57.8 62.6 67.4 72.2 77.0 81.8intervals.freq(h1)

lower upper

[1,] 53.0 57.8

[2,] 57.8 62.6

[3,] 62.6 67.4

[4,] 67.4 72.2

[5,] 72.2 77.0

[6,] 77.0 81.8 Lower Upper Main Frequency Percentage CF CPF

1 53.0 67.4 60.2 13 43.3 13 43.3

2 67.4 72.2 69.8 10 33.3 23 76.7

3 72.2 77.0 74.6 6 20.0 29 96.7

4 77.0 81.8 79.4 1 3.3 30 100.0oldpar<-par(mfrow=c(1,2),mar=c(4,4,0,1),cex=0.8) plot(h3, frequency=2,col=colors()[84],ylim=c(0,0.6),axes=FALSE,xlab="weight",ylab="%") y<-seq(0,0.6,0.2) axis(2,y,y*100,las=2) axis(1,h3$breaks) normal.freq(h3,frequency=2,col=colors()[90]) ogive.freq(h3,col=colors()[84],xlab="weight")

Join frequency and relative frequency with normal and Ogive

weight RCF

1 53.0 0.0000

2 67.4 0.4333

3 72.2 0.7667

4 77.0 0.9667

5 81.8 1.0000

6 86.6 1.0000par(oldpar)

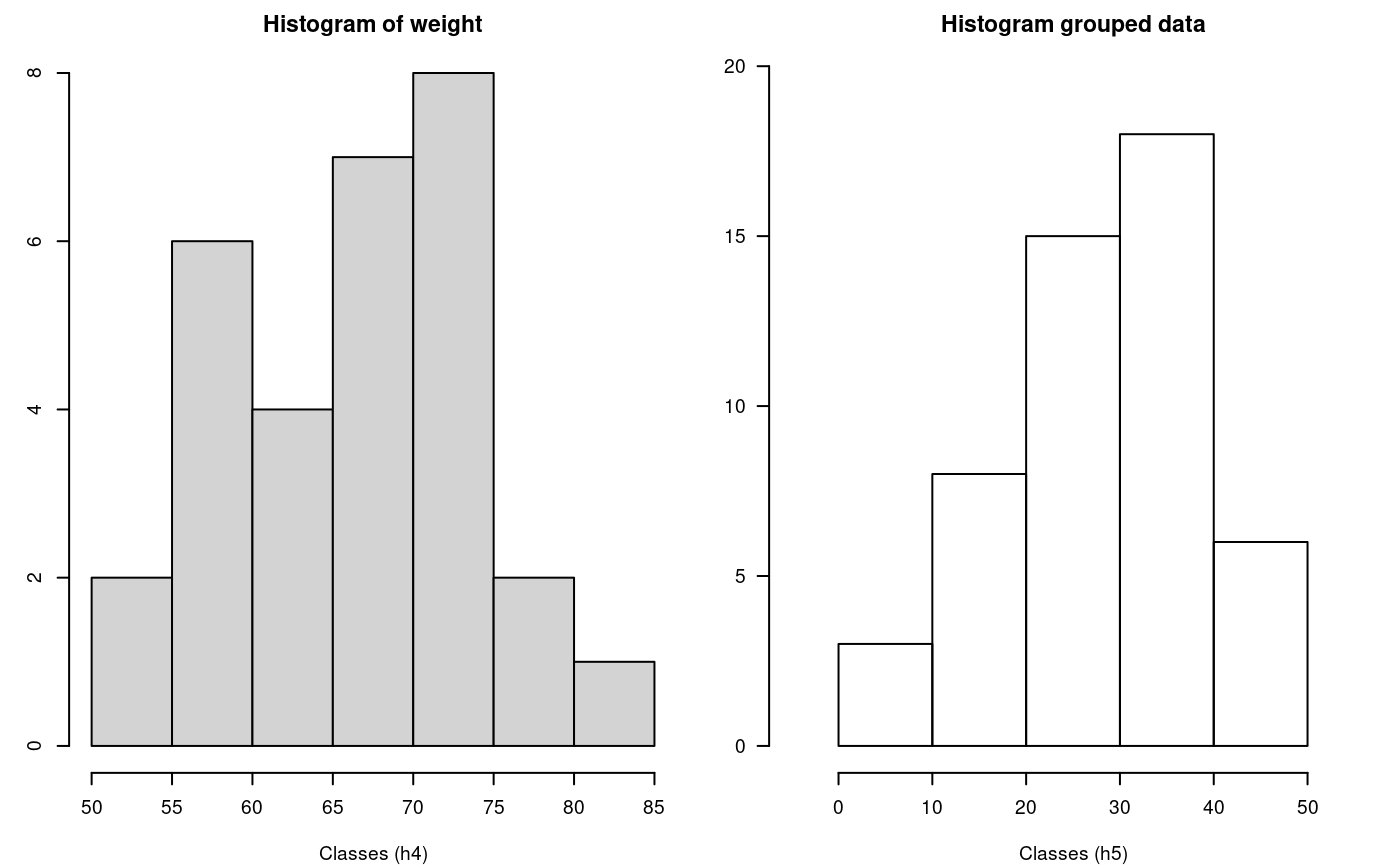

hist() and graph.freq() based on grouped data

The hist and graph.freq have the same characteristics, only f2 allows build histogram from grouped data.

0-10 (3)

10-20 (8)

20-30 (15)

30-40 (18)

40-50 (6)

oldpar<-par(mfrow=c(1,2),mar=c(4,3,2,1),cex=0.6) h4<-hist(weight,xlab="Classes (h4)") table.freq(h4) # this is possible # hh<-graph.freq(h4,plot=FALSE) # summary(hh) # new class classes <- c(0, 10, 20, 30, 40, 50) freq <- c(3, 8, 15, 18, 6) h5 <- graph.freq(classes,counts=freq, xlab="Classes (h5)",main="Histogram grouped data")

hist() function and histogram defined class

par(oldpar)

Lower Upper Main Frequency Percentage CF CPF

0 10 5 3 6 3 6

10 20 15 8 16 11 22

20 30 25 15 30 26 52

30 40 35 18 36 44 88

40 50 45 6 12 50 100Experimental Designs

The package agricolae presents special functions for the creation of the field book for experimental designs. Due to the random generation, this package is quite used in agricultural research.

For this generation, certain parameters are required, as for example the name of each treatment, the number of repetitions, and others, according to the design (Le Clerg et al., 1962; Cochran and Cox, 1992; Kuehl, 2000; Montgomery, 2002). There are other parameters of random generation, as the seed to reproduce the same random generation or the generation method (See the reference manual of agricolae).

Important parameters in the generation of design:

| series: | A constant that is used to set numerical tag blocks , eg number = 2, the labels will be : 101, 102, for the first row or block, 201, 202, for the following , in the case of completely randomized design, the numbering is sequencial. |

| design: | Some features of the design requested agricolae be applied specifically to design.ab(factorial) or design.split (split plot) and their possible values are: “rcbd”, “crd” and “lsd”. |

| seed: | The seed for the random generation and its value is any real value, if the value is zero, it has no reproducible generation, in this case copy of value of the outdesign$parameters. |

| kinds: | the random generation method, by default “Super-Duper”. |

| first: | For some designs is not required random the first repetition, especially in the block design, if you want to switch to random, change to TRUE. |

| randomization: | TRUE or FALSE. If false, randomization is not performed |

Output design:

| parameters: | the input to generation design, include the seed to generation random, if seed=0, the program generate one value and it is possible reproduce the design. |

| book: | field book |

| statistics: | the information statistics the design for example efficiency index, number of treatments. |

| sketch: | distribution of treatments in the field. |

| The enumeration of the plots | zigzag is a function that allows you to place the numbering of the plots in the direction of serpentine: The zigzag is output generated by one design: blocks, Latin square, graeco, split plot, strip plot, into blocks factorial, balanced incomplete block, cyclic lattice, alpha and augmented blocks. |

| fieldbook: | output zigzag, contain field book. |

Completely Randomized Design (CRD)

It generates completely a randomized design with equal or different repetition. “Random” uses the methods of number generation in R.The seed is by set.seed(seed, kinds). They only require the names of the treatments and the number of their repetitions and its parameters are:

str(design.crd)

function (trt, r, serie = 2, seed = 0, kinds = "Super-Duper", randomization = TRUE) trt <- c("A", "B", "C") repeticion <- c(4, 3, 4) outdesign <- design.crd(trt,r=repeticion,seed=777,serie=0) book1 <- outdesign$book head(book1)

plots r trt

1 1 1 C

2 2 1 A

3 3 1 B

4 4 2 A

5 5 3 A

6 6 2 CExcel:write.csv(book1,“book1.csv”,row.names=FALSE)

Randomized Complete Block Design (RCBD)

It generates field book and sketch to Randomized Complete Block Design. “Random” uses the methods of number generation in R.The seed is by set.seed(seed, kinds). They require the names of the treatments and the number of blocks and its parameters are:

str(design.rcbd)

function (trt, r, serie = 2, seed = 0, kinds = "Super-Duper", first = TRUE,

continue = FALSE, randomization = TRUE) trt <- c("A", "B", "C","D","E") repeticion <- 4 outdesign <- design.rcbd(trt,r=repeticion, seed=-513, serie=2) # book2 <- outdesign$book book2<- zigzag(outdesign) # zigzag numeration print(outdesign$sketch)

[,1] [,2] [,3] [,4] [,5]

[1,] "E" "B" "D" "A" "C"

[2,] "B" "A" "D" "C" "E"

[3,] "C" "E" "A" "B" "D"

[4,] "D" "C" "E" "B" "A" [,1] [,2] [,3] [,4] [,5]

[1,] 101 102 103 104 105

[2,] 205 204 203 202 201

[3,] 301 302 303 304 305

[4,] 405 404 403 402 401Latin Square Design

It generates Latin Square Design. “Random” uses the methods of number generation in R.The seed is by set.seed(seed, kinds). They require the names of the treatments and its parameters are:

str(design.lsd)

function (trt, serie = 2, seed = 0, kinds = "Super-Duper", first = TRUE,

randomization = TRUE) trt <- c("A", "B", "C", "D") outdesign <- design.lsd(trt, seed=543, serie=2) print(outdesign$sketch)

[,1] [,2] [,3] [,4]

[1,] "B" "C" "A" "D"

[2,] "D" "A" "C" "B"

[3,] "C" "D" "B" "A"

[4,] "A" "B" "D" "C" Graeco-Latin Designs

A graeco-latin square is a \(k \times k\) pattern that permits the study of \(k\) treatments simultaneously with three different blocking variables, each at \(k\) levels. The function is only for squares of the odd numbers and even numbers (4, 8, 10 and 12). They require the names of the treatments of each factor of study and its parameters are:

str(design.graeco)

function (trt1, trt2, serie = 2, seed = 0, kinds = "Super-Duper", randomization = TRUE) trt1 <- c("A", "B", "C", "D") trt2 <- 1:4 outdesign <- design.graeco(trt1,trt2, seed=543, serie=2) print(outdesign$sketch)

[,1] [,2] [,3] [,4]

[1,] "NA 2" "NA 4" "NA 3" "NA 1"

[2,] "NA 3" "NA 1" "NA 2" "NA 4"

[3,] "NA 1" "NA 3" "NA 4" "NA 2"

[4,] "NA 4" "NA 2" "NA 1" "NA 3"Youden Square Design

Such designs are referred to as Youden squares since they were introduced by Youden (1937) after Yates (1936) considered the special case of column equal to number treatment minus 1. “Random” uses the methods of number generation in R. The seed is by set.seed(seed, kinds). They require the names of the treatments of each factor of study and its parameters are:

str(design.youden)

function (trt, r, serie = 2, seed = 0, kinds = "Super-Duper", first = TRUE,

randomization = TRUE) varieties<-c("perricholi","yungay","maria bonita","tomasa") r<-3 outdesign <-design.youden(varieties,r,serie=2,seed=23) print(outdesign$sketch)

[,1] [,2] [,3]

[1,] "maria bonita" "tomasa" "perricholi"

[2,] "yungay" "maria bonita" "tomasa"

[3,] "perricholi" "yungay" "maria bonita"

[4,] "tomasa" "perricholi" "yungay" book <- outdesign$book print(book) # field book.

plots row col varieties

1 101 1 1 maria bonita

2 102 1 2 tomasa

3 103 1 3 perricholi

4 201 2 1 yungay

5 202 2 2 maria bonita

6 203 2 3 tomasa

7 301 3 1 perricholi

8 302 3 2 yungay

9 303 3 3 maria bonita

10 401 4 1 tomasa

11 402 4 2 perricholi

12 403 4 3 yungayprint(matrix(as.numeric(book[,1]),byrow = TRUE, ncol = r))

[,1] [,2] [,3]

[1,] 101 102 103

[2,] 201 202 203

[3,] 301 302 303

[4,] 401 402 403Serpentine enumeration

book <- zigzag(outdesign) print(matrix(as.numeric(book[,1]),byrow = TRUE, ncol = r))

[,1] [,2] [,3]

[1,] 101 102 103

[2,] 203 202 201

[3,] 301 302 303

[4,] 403 402 401Balanced Incomplete Block Designs (BIBD)

Creates Randomized Balanced Incomplete Block Design. “Random” uses the methods of number generation in R. The seed is by set.seed(seed, kinds). They require the names of the treatments and the size of the block and its parameters are:

str(design.bib)

function (trt, k, r = NULL, serie = 2, seed = 0, kinds = "Super-Duper",

maxRep = 20, randomization = TRUE) trt <- c("A", "B", "C", "D", "E" ) k <- 4 outdesign <- design.bib(trt,k, seed=543, serie=2)

Parameters BIB

==============

Lambda : 3

treatmeans : 5

Block size : 4

Blocks : 5

Replication: 4

Efficiency factor 0.9375

<<< Book >>>book5 <- outdesign$book outdesign$statistics

lambda treatmeans blockSize blocks r Efficiency

values 3 5 4 5 4 0.9375outdesign$parameters

$design

[1] "bib"

$trt

[1] "A" "B" "C" "D" "E"

$k

[1] 4

$serie

[1] 2

$seed

[1] 543

$kinds

[1] "Super-Duper"According to the produced information, they are five blocks of size 4, being the matrix:

outdesign$sketch

[,1] [,2] [,3] [,4]

[1,] "B" "C" "E" "A"

[2,] "C" "D" "B" "A"

[3,] "A" "D" "E" "B"

[4,] "E" "C" "D" "B"

[5,] "D" "C" "E" "A" It can be observed that the treatments have four repetitions. The parameter lambda has three repetitions, which means that a couple of treatments are together on three occasions. For example, B and E are found in the blocks I, II and V.

Cyclic Designs

They require the names of the treatments, the size of the block and the number of repetitions. This design is used for 6 to 30 treatments. The repetitions are a multiple of the size of the block; if they are six treatments and the size is 3, then the repetitions can be 6, 9, 12, etc. and its parameters are:

str(design.cyclic)

function (trt, k, r, serie = 2, rowcol = FALSE, seed = 0, kinds = "Super-Duper",

randomization = TRUE) trt <- c("A", "B", "C", "D", "E", "F" ) outdesign <- design.cyclic(trt,k=3, r=6, seed=543, serie=2)

cyclic design

Generator block basic:

1 2 4

1 3 2

Parameters

===================

treatmeans : 6

Block size : 3

Replication: 6 book6 <- outdesign$book outdesign$sketch[[1]]

[,1] [,2] [,3]

[1,] "F" "D" "C"

[2,] "C" "B" "E"

[3,] "D" "E" "A"

[4,] "B" "E" "F"

[5,] "A" "F" "C"

[6,] "B" "A" "D" outdesign$sketch[[2]]

[,1] [,2] [,3]

[1,] "A" "F" "E"

[2,] "A" "C" "B"

[3,] "A" "F" "B"

[4,] "C" "D" "E"

[5,] "E" "D" "F"

[6,] "D" "C" "B" 12 blocks of 4 treatments each have been generated.

Serpentine enumeration

[,1] [,2] [,3]

[1,] 101 102 103

[2,] 106 105 104

[3,] 107 108 109

[4,] 112 111 110

[5,] 113 114 115

[6,] 118 117 116t(X[,,2])

[,1] [,2] [,3]

[1,] 201 202 203

[2,] 206 205 204

[3,] 207 208 209

[4,] 212 211 210

[5,] 213 214 215

[6,] 218 217 216Lattice Designs

SIMPLE and TRIPLE lattice designs. It randomizes treatments in \(k \times k\) lattice. They require a number of treatments of a perfect square; for example 9, 16, 25, 36, 49, etc. and its parameters are:

str(design.lattice)

function (trt, r = 3, serie = 2, seed = 0, kinds = "Super-Duper", randomization = TRUE) They can generate a simple lattice (2 rep.) or a triple lattice (3 rep.) generating a triple lattice design for 9 treatments \(3 \times 3\)

trt<-letters[1:9] outdesign <-design.lattice(trt, r = 3, serie = 2, seed = 33, kinds = "Super-Duper")

Lattice design, triple 3 x 3

Efficiency factor

(E ) 0.7272727

<<< Book >>>book7 <- outdesign$book outdesign$parameters

$design

[1] "lattice"

$type

[1] "triple"

$trt

[1] "a" "b" "c" "d" "e" "f" "g" "h" "i"

$r

[1] 3

$serie

[1] 2

$seed

[1] 33

$kinds

[1] "Super-Duper"outdesign$sketch

$rep1

[,1] [,2] [,3]

[1,] "g" "c" "a"

[2,] "f" "b" "h"

[3,] "i" "e" "d"

$rep2

[,1] [,2] [,3]

[1,] "g" "f" "i"

[2,] "a" "h" "d"

[3,] "c" "b" "e"

$rep3

[,1] [,2] [,3]

[1,] "g" "h" "e"

[2,] "c" "f" "d"

[3,] "a" "b" "i" head(book7)

plots r block trt

1 101 1 1 g

2 102 1 1 c

3 103 1 1 a

4 104 1 2 f

5 105 1 2 b

6 106 1 2 hAlpha Designs

Generates an alpha designs starting from the alpha design fixing under the series formulated by Patterson and Williams. These designs are generated by the alpha arrangements. They are similar to the lattice designs, but the tables are rectangular \(s\) by \(k\) (with \(s\) blocks and \(k<s\) columns. The number of treatments should be equal to \(s \times k\) and all the experimental units \(r \times s \times k\) (\(r\) replications) and its parameters are:

str(design.alpha)

function (trt, k, r, serie = 2, seed = 0, kinds = "Super-Duper", randomization = TRUE) trt <- letters[1:15] outdesign <- design.alpha(trt,k=3,r=2,seed=543)

Alpha Design (0,1) - Serie I

Parameters Alpha Design

=======================

Treatmeans : 15

Block size : 3

Blocks : 5

Replication: 2

Efficiency factor

(E ) 0.6363636

<<< Book >>>book8 <- outdesign$book outdesign$statistics

treatments blocks Efficiency

values 15 5 0.6363636outdesign$sketch

$rep1

[,1] [,2] [,3]

[1,] "i" "g" "m"

[2,] "f" "o" "h"

[3,] "n" "j" "b"

[4,] "a" "c" "k"

[5,] "e" "l" "d"

$rep2

[,1] [,2] [,3]

[1,] "g" "f" "k"

[2,] "e" "j" "a"

[3,] "m" "c" "l"

[4,] "n" "d" "o"

[5,] "i" "h" "b" [,1] [,2] [,3]

[1,] 101 102 103

[2,] 104 105 106

[3,] 107 108 109

[4,] 110 111 112

[5,] 113 114 115t(A[,,2])

[,1] [,2] [,3]

[1,] 201 202 203

[2,] 204 205 206

[3,] 207 208 209

[4,] 210 211 212

[5,] 213 214 215Augmented Block Designs

These are designs for two types of treatments: the control treatments (common) and the increased treatments. The common treatments are applied in complete randomized blocks, and the increased treatments, at random. Each treatment should be applied in any block once only. It is understood that the common treatments are of a greater interest; the standard error of the difference is much smaller than when between two increased ones in different blocks. The function design.dau() achieves this purpose and its parameters are:

str(design.dau)

function (trt1, trt2, r, serie = 2, seed = 0, kinds = "Super-Duper", name = "trt",

randomization = TRUE) rm(list=ls()) trt1 <- c("A", "B", "C", "D") trt2 <- c("t","u","v","w","x","y","z") outdesign <- design.dau(trt1, trt2, r=5, seed=543, serie=2) book9 <- outdesign$book with(book9,by(trt, block,as.character))

block: 1

[1] "C" "B" "v" "D" "t" "A"

------------------------------------------------------------

block: 2

[1] "D" "u" "A" "B" "x" "C"

------------------------------------------------------------

block: 3

[1] "B" "y" "C" "A" "D"

------------------------------------------------------------

block: 4

[1] "A" "B" "C" "D" "w"

------------------------------------------------------------

block: 5

[1] "z" "A" "C" "D" "B"Serpentine enumeration

block: 1

[1] "101" "102" "103" "104" "105" "106"

------------------------------------------------------------

block: 2

[1] "206" "205" "204" "203" "202" "201"

------------------------------------------------------------

block: 3

[1] "301" "302" "303" "304" "305"

------------------------------------------------------------

block: 4

[1] "405" "404" "403" "402" "401"

------------------------------------------------------------

block: 5

[1] "501" "502" "503" "504" "505"head(book)

plots block trt

1 101 1 C

2 102 1 B

3 103 1 v

4 104 1 D

5 105 1 t

6 106 1 AFor augmented ompletely randomized design, use the function design.crd().

Split Plot Designs

These designs have two factors, one is applied in plots and is defined as trt1 in a randomized complete block design; and a second factor as trt2 , which is applied in the subplots of each plot applied at random. The function design.split() permits to find the experimental plan for this design and its parameters are:

str(design.split)

function (trt1, trt2, r = NULL, design = c("rcbd", "crd", "lsd"), serie = 2,

seed = 0, kinds = "Super-Duper", first = TRUE, randomization = TRUE) Aplication

trt1<-c("A","B","C","D") trt2<-c("a","b","c") outdesign <-design.split(trt1,trt2,r=3,serie=2,seed=543) book10 <- outdesign$book head(book10)

plots splots block trt1 trt2

1 101 1 1 D b

2 101 2 1 D a

3 101 3 1 D c

4 102 1 1 B a

5 102 2 1 B b

6 102 3 1 B cStrip-Plot Designs

These designs are used when there are two types of treatments (factors) and are applied separately in large plots, called bands, in a vertical and horizontal direction of the block, obtaining the divided blocks. Each block constitutes a repetition and its parameters are:

str(design.strip)

function (trt1, trt2, r, serie = 2, seed = 0, kinds = "Super-Duper", randomization = TRUE) Aplication

trt1<-c("A","B","C","D") trt2<-c("a","b","c") outdesign <-design.strip(trt1,trt2,r=3,serie=2,seed=543) book11 <- outdesign$book head(book11)

plots block trt1 trt2

1 101 1 D b

2 102 1 D a

3 103 1 D c

4 104 1 B b

5 105 1 B a

6 106 1 B ct3<-paste(book11$trt1, book11$trt2) B1<-t(matrix(t3[1:12],c(4,3))) B2<-t(matrix(t3[13:24],c(3,4))) B3<-t(matrix(t3[25:36],c(3,4))) print(B1)

[,1] [,2] [,3] [,4]

[1,] "D b" "D a" "D c" "B b"

[2,] "B a" "B c" "A b" "A a"

[3,] "A c" "C b" "C a" "C c"print(B2)

[,1] [,2] [,3]

[1,] "C b" "C a" "C c"

[2,] "B b" "B a" "B c"

[3,] "A b" "A a" "A c"

[4,] "D b" "D a" "D c"print(B3)

[,1] [,2] [,3]

[1,] "A c" "A b" "A a"

[2,] "B c" "B b" "B a"

[3,] "D c" "D b" "D a"

[4,] "C c" "C b" "C a"Serpentine enumeration

plots block trt1 trt2

1 101 1 D b

2 102 1 D a

3 103 1 D c

4 106 1 B b

5 105 1 B a

6 104 1 B c [,1] [,2] [,3]

[1,] 101 102 103

[2,] 106 105 104

[3,] 107 108 109

[4,] 112 111 110t(X[,,2])

[,1] [,2] [,3]

[1,] 201 202 203

[2,] 206 205 204

[3,] 207 208 209

[4,] 212 211 210t(X[,,3])

[,1] [,2] [,3]

[1,] 301 302 303

[2,] 306 305 304

[3,] 307 308 309

[4,] 312 311 310Factorial

The full factorial of n factors applied to an experimental design (CRD, RCBD and LSD) is common and this procedure in agricolae applies the factorial to one of these three designs and its parameters are:

str(design.ab)

function (trt, r = NULL, serie = 2, design = c("rcbd", "crd", "lsd"), seed = 0,

kinds = "Super-Duper", first = TRUE, randomization = TRUE) To generate the factorial, you need to create a vector of levels of each factor, the method automatically generates up to 25 factors and \(r\) repetitions.

trt <- c (4,2,3) # three factors with 4,2 and 3 levels.

to crd and rcbd designs, it is necessary to value \(r\) as the number of repetitions, this can be a vector if unequal to equal or constant repetition (recommended).

trt<-c(3,2) # factorial 3x2 outdesign <-design.ab(trt, r=3, serie=2) book12 <- outdesign$book head(book12) # print of the field book

plots block A B

1 101 1 2 2

2 102 1 2 1

3 103 1 3 2

4 104 1 1 2

5 105 1 1 1

6 106 1 3 1Serpentine enumeration

plots block A B

1 101 1 2 2

2 102 1 2 1

3 103 1 3 2

4 104 1 1 2

5 105 1 1 1

6 106 1 3 1factorial \(2 \times 2 \times 2\) with 5 replications in completely randomized design.

[1] "parameters" "book" crd$parameters

$design

[1] "factorial"

$trt

[1] "1 1 1" "1 1 2" "1 2 1" "1 2 2" "2 1 1" "2 1 2" "2 2 1" "2 2 2"

$r

[1] 5 5 5 5 5 5 5 5

$serie

[1] 2

$seed

[1] 1923434691

$kinds

[1] "Super-Duper"

[[7]]

[1] TRUE

$applied

[1] "crd"head(crd$book)

plots r A B C

1 101 1 2 2 2

2 102 2 2 2 2

3 103 1 2 1 1

4 104 1 1 2 1

5 105 1 1 1 1

6 106 2 1 2 1Multiple Comparisons

For the analyses, the following functions of agricolae are used: LSD.test, HSD.test, duncan.test, scheffe.test, waller.test, SNK.test, REGW.test (Hsu, 1996; Steel et al., 1997) and durbin.test, kruskal, friedman, waerden.test and Median.test (Conover, 1999).

For every statistical analysis, the data should be organized in columns. For the demonstration, the agricolae database will be used.

The sweetpotato data correspond to a completely random experiment in field with plots of 50 sweet potato plants, subjected to the virus effect and to a control without virus (See the reference manual of the package).

[1] 17.1666[1] 27.625Model parameters: Degrees of freedom and variance of the error:

df<-df.residual(model) MSerror<-deviance(model)/df

The Least Significant Difference (LSD)

It includes the multiple comparison through the method of the minimum significant difference (Least Significant Difference), (Steel et al., 1997).

# comparison <- LSD.test(yield,virus,df,MSerror) LSD.test(model, "virus",console=TRUE)

Study: model ~ "virus"

LSD t Test for yield

Mean Square Error: 22.48917

virus, means and individual ( 95 %) CI

yield std r LCL UCL Min Max

cc 24.40000 3.609709 3 18.086268 30.71373 21.7 28.5

fc 12.86667 2.159475 3 6.552935 19.18040 10.6 14.9

ff 36.33333 7.333030 3 30.019601 42.64707 28.0 41.8

oo 36.90000 4.300000 3 30.586268 43.21373 32.1 40.4

Alpha: 0.05 ; DF Error: 8

Critical Value of t: 2.306004

least Significant Difference: 8.928965

Treatments with the same letter are not significantly different.

yield groups

oo 36.90000 a

ff 36.33333 a

cc 24.40000 b

fc 12.86667 cIn the function LSD.test, the multiple comparison was carried out. In order to obtain the probabilities of the comparisons, it should be indicated that groups are not required; thus:

# comparison <- LSD.test(yield, virus,df, MSerror, group=FALSE) outLSD <-LSD.test(model, "virus", group=FALSE,console=TRUE)

Study: model ~ "virus"

LSD t Test for yield

Mean Square Error: 22.48917

virus, means and individual ( 95 %) CI

yield std r LCL UCL Min Max

cc 24.40000 3.609709 3 18.086268 30.71373 21.7 28.5

fc 12.86667 2.159475 3 6.552935 19.18040 10.6 14.9

ff 36.33333 7.333030 3 30.019601 42.64707 28.0 41.8

oo 36.90000 4.300000 3 30.586268 43.21373 32.1 40.4

Alpha: 0.05 ; DF Error: 8

Critical Value of t: 2.306004

Comparison between treatments means

difference pvalue signif. LCL UCL

cc - fc 11.5333333 0.0176 * 2.604368 20.462299

cc - ff -11.9333333 0.0151 * -20.862299 -3.004368

cc - oo -12.5000000 0.0121 * -21.428965 -3.571035

fc - ff -23.4666667 0.0003 *** -32.395632 -14.537701

fc - oo -24.0333333 0.0003 *** -32.962299 -15.104368

ff - oo -0.5666667 0.8873 -9.495632 8.362299Signif. codes:

0 * 0.001 ** 0.01 * 0.05 . 0.1 ’ ’ 1**

$statistics

MSerror Df Mean CV t.value LSD

22 8 28 17 2.3 8.9

$parameters

test p.ajusted name.t ntr alpha

Fisher-LSD none virus 4 0.05

$means

yield std r LCL UCL Min Max Q25 Q50 Q75

cc 24 3.6 3 18.1 31 22 28 22 23 26

fc 13 2.2 3 6.6 19 11 15 12 13 14

ff 36 7.3 3 30.0 43 28 42 34 39 40

oo 37 4.3 3 30.6 43 32 40 35 38 39

$comparison

difference pvalue signif. LCL UCL

cc - fc 11.53 0.0176 * 2.6 20.5

cc - ff -11.93 0.0151 * -20.9 -3.0

cc - oo -12.50 0.0121 * -21.4 -3.6

fc - ff -23.47 0.0003 *** -32.4 -14.5

fc - oo -24.03 0.0003 *** -33.0 -15.1

ff - oo -0.57 0.8873 -9.5 8.4

$groups

NULL

attr(,"class")

[1] "group"holm, hommel, hochberg, bonferroni, BH, BY, fdr

With the function LSD.test we can make adjustments to the probabilities found, as for example the adjustment by Bonferroni, holm and other options see Adjust P-values for Multiple Comparisons, function ‘p.adjust(stats)’ (R Core Team, 2020).

LSD.test(model, "virus", group=FALSE, p.adj= "bon",console=TRUE)

Study: model ~ "virus"

LSD t Test for yield

P value adjustment method: bonferroni

Mean Square Error: 22

virus, means and individual ( 95 %) CI

yield std r LCL UCL Min Max

cc 24 3.6 3 18.1 31 22 28

fc 13 2.2 3 6.6 19 11 15

ff 36 7.3 3 30.0 43 28 42

oo 37 4.3 3 30.6 43 32 40

Alpha: 0.05 ; DF Error: 8

Critical Value of t: 3.5

Comparison between treatments means

difference pvalue signif. LCL UCL

cc - fc 11.53 0.1058 -1.9 25.00

cc - ff -11.93 0.0904 . -25.4 1.54

cc - oo -12.50 0.0725 . -26.0 0.97

fc - ff -23.47 0.0018 ** -36.9 -10.00

fc - oo -24.03 0.0015 ** -37.5 -10.56

ff - oo -0.57 1.0000 -14.0 12.90 yield groups

oo 37 a

ff 36 a

cc 24 b

fc 13 c difference pvalue signif.

cc - fc 11.53 0.0484 *

cc - ff -11.93 0.0484 *

cc - oo -12.50 0.0484 *

fc - ff -23.47 0.0015 **

fc - oo -24.03 0.0015 **

ff - oo -0.57 0.8873 Other comparison tests can be applied, such as duncan, Student-Newman-Keuls, tukey and waller-duncan

For Duncan, use the function duncan.test; for Student-Newman-Keuls, the function SNK.test; for Tukey, the function HSD.test; for Scheffe, the function scheffe.test and for Waller-Duncan, the function waller.test. The arguments are the same. Waller also requires the value of F-calculated of the ANOVA treatments. If the model is used as a parameter, this is no longer necessary.

Duncan’s New Multiple-Range Test

It corresponds to the Duncan’s Test (Steel et al., 1997).

duncan.test(model, "virus",console=TRUE)

Study: model ~ "virus"

Duncan's new multiple range test

for yield

Mean Square Error: 22

virus, means

yield std r Min Max

cc 24 3.6 3 22 28

fc 13 2.2 3 11 15

ff 36 7.3 3 28 42

oo 37 4.3 3 32 40

Alpha: 0.05 ; DF Error: 8

Critical Range

2 3 4

8.9 9.3 9.5

Means with the same letter are not significantly different.

yield groups

oo 37 a

ff 36 a

cc 24 b

fc 13 cStudent-Newman-Keuls

Student, Newman and Keuls helped to improve the Newman-Keuls test of 1939, which was known as the Keuls method (Steel et al., 1997).

# SNK.test(model, "virus", alpha=0.05,console=TRUE) SNK.test(model, "virus", group=FALSE,console=TRUE)

Study: model ~ "virus"

Student Newman Keuls Test

for yield

Mean Square Error: 22

virus, means

yield std r Min Max

cc 24 3.6 3 22 28

fc 13 2.2 3 11 15

ff 36 7.3 3 28 42

oo 37 4.3 3 32 40

Comparison between treatments means

difference pvalue signif. LCL UCL

cc - fc 11.53 0.0176 * 2.6 20.5

cc - ff -11.93 0.0151 * -20.9 -3.0

cc - oo -12.50 0.0291 * -23.6 -1.4

fc - ff -23.47 0.0008 *** -34.5 -12.4

fc - oo -24.03 0.0012 ** -36.4 -11.6

ff - oo -0.57 0.8873 -9.5 8.4Ryan, Einot and Gabriel and Welsch

Multiple range tests for all pairwise comparisons, to obtain a confident inequalities multiple range tests (Hsu, 1996).

# REGW.test(model, "virus", alpha=0.05,console=TRUE) REGW.test(model, "virus", group=FALSE,console=TRUE)

Study: model ~ "virus"

Ryan, Einot and Gabriel and Welsch multiple range test

for yield

Mean Square Error: 22

virus, means

yield std r Min Max

cc 24 3.6 3 22 28

fc 13 2.2 3 11 15

ff 36 7.3 3 28 42

oo 37 4.3 3 32 40

Comparison between treatments means

difference pvalue signif. LCL UCL

cc - fc 11.53 0.0350 * 0.91 22.16

cc - ff -11.93 0.0360 * -23.00 -0.87

cc - oo -12.50 0.0482 * -24.90 -0.10

fc - ff -23.47 0.0006 *** -34.09 -12.84

fc - oo -24.03 0.0007 *** -35.10 -12.97

ff - oo -0.57 0.9873 -11.19 10.06Tukey’s W Procedure (HSD)

This studentized range test, created by Tukey in 1953, is known as the Tukey’s HSD (Honestly Significant Differences) (Steel et al., 1997).

outHSD<- HSD.test(model, "virus",console=TRUE)

Study: model ~ "virus"

HSD Test for yield

Mean Square Error: 22

virus, means

yield std r Min Max

cc 24 3.6 3 22 28

fc 13 2.2 3 11 15

ff 36 7.3 3 28 42

oo 37 4.3 3 32 40

Alpha: 0.05 ; DF Error: 8

Critical Value of Studentized Range: 4.5

Minimun Significant Difference: 12

Treatments with the same letter are not significantly different.

yield groups

oo 37 a

ff 36 ab

cc 24 bc

fc 13 coutHSD$statistics

MSerror Df Mean CV MSD

22 8 28 17 12

$parameters

test name.t ntr StudentizedRange alpha

Tukey virus 4 4.5 0.05

$means

yield std r Min Max Q25 Q50 Q75

cc 24 3.6 3 22 28 22 23 26

fc 13 2.2 3 11 15 12 13 14

ff 36 7.3 3 28 42 34 39 40

oo 37 4.3 3 32 40 35 38 39

$comparison

NULL

$groups

yield groups

oo 37 a

ff 36 ab

cc 24 bc

fc 13 c

attr(,"class")

[1] "group"Tukey (HSD) for different repetition

Include the argument unbalanced = TRUE in the function. Valid for group = TRUE/FALSE

A<-sweetpotato[-c(4,5,7),] modelUnbalanced <- aov(yield ~ virus, data=A) outUn <-HSD.test(modelUnbalanced, "virus",group=FALSE, unbalanced = TRUE) print(outUn$comparison)

difference pvalue signif. LCL UCL

cc - fc 11.3 0.252 -8 30.6

cc - ff -9.2 0.386 -28 10.1

cc - oo -12.5 0.196 -32 6.8

fc - ff -20.5 0.040 * -40 -1.2

fc - oo -23.8 0.022 * -43 -4.5

ff - oo -3.3 0.917 -23 16.0 yield groups

oo 37 a

ff 34 a

cc 24 ab

fc 13 bIf this argument is not included, the probabilities of significance will not be consistent with the choice of groups.

Illustrative example of this inconsistency:

difference pvalue

cc - fc 11.3 0.317

cc - ff -9.2 0.297

cc - oo -12.5 0.096

fc - ff -20.5 0.071

fc - oo -23.8 0.033

ff - oo -3.3 0.885 yield groups

oo 37 a

ff 34 ab

cc 24 ab

fc 13 bWaller-Duncan’s Bayesian K-Ratio T-Test

Duncan continued the multiple comparison procedures, introducing the criterion of minimizing both experimental errors; for this, he used the Bayes’ theorem, obtaining one new test called Waller-Duncan (Waller and Duncan, 1969; Steel et al., 1997).

# variance analysis: anova(model)

Analysis of Variance Table

Response: yield

Df Sum Sq Mean Sq F value Pr(>F)

virus 3 1170 390 17.3 0.00073 ***

Residuals 8 180 22

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1with(sweetpotato,waller.test(yield,virus,df,MSerror,Fc= 17.345, group=FALSE,console=TRUE))

Study: yield ~ virus

Waller-Duncan K-ratio t Test for yield

This test minimizes the Bayes risk under additive loss and certain other assumptions

......

K ratio 100.0

Error Degrees of Freedom 8.0

Error Mean Square 22.5

F value 17.3

Critical Value of Waller 2.2

virus, means

yield std r Min Max

cc 24 3.6 3 22 28

fc 13 2.2 3 11 15

ff 36 7.3 3 28 42

oo 37 4.3 3 32 40

Comparison between treatments means

Difference significant

cc - fc 11.53 TRUE

cc - ff -11.93 TRUE

cc - oo -12.50 TRUE

fc - ff -23.47 TRUE

fc - oo -24.03 TRUE

ff - oo -0.57 FALSEIn another case with only invoking the model object:

outWaller <- waller.test(model, "virus", group=FALSE,console=FALSE)

The found object outWaller has information to make other procedures.

names(outWaller)

[1] "statistics" "parameters" "means" "comparison" "groups" print(outWaller$comparison)

Difference significant

cc - fc 11.53 TRUE

cc - ff -11.93 TRUE

cc - oo -12.50 TRUE

fc - ff -23.47 TRUE

fc - oo -24.03 TRUE

ff - oo -0.57 FALSEIt is indicated that the virus effect “ff” is not significant to the control “oo”.

outWaller$statistics

Mean Df CV MSerror F.Value Waller CriticalDifference

28 8 17 22 17 2.2 8.7Scheffe’s Test

This method, created by Scheffe in 1959, is very general for all the possible contrasts and their confidence intervals. The confidence intervals for the averages are very broad, resulting in a very conservative test for the comparison between treatment averages (Steel et al., 1997).

# analysis of variance: scheffe.test(model,"virus", group=TRUE,console=TRUE, main="Yield of sweetpotato\nDealt with different virus")

Study: Yield of sweetpotato

Dealt with different virus

Scheffe Test for yield

Mean Square Error : 22

virus, means

yield std r Min Max

cc 24 3.6 3 22 28

fc 13 2.2 3 11 15

ff 36 7.3 3 28 42

oo 37 4.3 3 32 40

Alpha: 0.05 ; DF Error: 8

Critical Value of F: 4.1

Minimum Significant Difference: 14

Means with the same letter are not significantly different.

yield groups

oo 37 a

ff 36 a

cc 24 ab

fc 13 bThe minimum significant value is very high. If you require the approximate probabilities of comparison, you can use the option group=FALSE.

outScheffe <- scheffe.test(model,"virus", group=FALSE, console=TRUE)

Study: model ~ "virus"

Scheffe Test for yield

Mean Square Error : 22

virus, means

yield std r Min Max

cc 24 3.6 3 22 28

fc 13 2.2 3 11 15

ff 36 7.3 3 28 42

oo 37 4.3 3 32 40

Alpha: 0.05 ; DF Error: 8

Critical Value of F: 4.1

Comparison between treatments means

Difference pvalue sig LCL UCL

cc - fc 11.53 0.0978 . -2 25.1

cc - ff -11.93 0.0855 . -25 1.6

cc - oo -12.50 0.0706 . -26 1.0

fc - ff -23.47 0.0023 ** -37 -9.9

fc - oo -24.03 0.0020 ** -38 -10.5

ff - oo -0.57 0.9991 -14 13.0Multiple comparison in factorial treatments

In a factorial combined effects of the treatments. Comparetive tests: LSD, HSD, Waller-Duncan, Duncan, Scheff\'e, SNK can be applied.

# modelABC <-aov (y ~ A * B * C, data)

# compare <-LSD.test (modelABC, c ("A", "B", "C"),console=TRUE)The comparison is the combination of A:B:C.

Data RCBD design with a factorial clone x nitrogen. The response variable yield.

yield <-scan (text = "6 7 9 13 16 20 8 8 9 7 8 8 12 17 18 10 9 12 9 9 9 14 18 21 11 12 11 8 10 10 15 16 22 9 9 9 " ) block <-gl (4, 9) clone <-rep (gl (3, 3, labels = c ("c1", "c2", "c3")), 4) nitrogen <-rep (gl (3, 1, labels = c ("n1", "n2", "n3")), 12) A <-data.frame (block, clone, nitrogen, yield) head (A)

block clone nitrogen yield

1 1 c1 n1 6

2 1 c1 n2 7

3 1 c1 n3 9

4 1 c2 n1 13

5 1 c2 n2 16

6 1 c2 n3 20outAOV <-aov (yield ~ block + clone * nitrogen, data = A)

anova (outAOV)

Analysis of Variance Table

Response: yield

Df Sum Sq Mean Sq F value Pr(>F)

block 3 21 6.9 5.82 0.00387 **

clone 2 498 248.9 209.57 6.4e-16 ***

nitrogen 2 54 27.0 22.76 2.9e-06 ***

clone:nitrogen 4 43 10.8 9.11 0.00013 ***

Residuals 24 29 1.2

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1outFactorial <-LSD.test (outAOV, c("clone", "nitrogen"), main = "Yield ~ block + nitrogen + clone + clone:nitrogen",console=TRUE)

Study: Yield ~ block + nitrogen + clone + clone:nitrogen

LSD t Test for yield

Mean Square Error: 1.2

clone:nitrogen, means and individual ( 95 %) CI

yield std r LCL UCL Min Max

c1:n1 7.5 1.29 4 6.4 8.6 6 9

c1:n2 8.5 1.29 4 7.4 9.6 7 10

c1:n3 9.0 0.82 4 7.9 10.1 8 10

c2:n1 13.5 1.29 4 12.4 14.6 12 15

c2:n2 16.8 0.96 4 15.6 17.9 16 18

c2:n3 20.2 1.71 4 19.1 21.4 18 22

c3:n1 9.5 1.29 4 8.4 10.6 8 11

c3:n2 9.5 1.73 4 8.4 10.6 8 12

c3:n3 10.2 1.50 4 9.1 11.4 9 12

Alpha: 0.05 ; DF Error: 24

Critical Value of t: 2.1

least Significant Difference: 1.6

Treatments with the same letter are not significantly different.

yield groups

c2:n3 20.2 a

c2:n2 16.8 b

c2:n1 13.5 c

c3:n3 10.2 d

c3:n1 9.5 de

c3:n2 9.5 de

c1:n3 9.0 def

c1:n2 8.5 ef

c1:n1 7.5 fAnalysis of Balanced Incomplete Blocks

This analysis can come from balanced or partially balanced designs. The function BIB.test is for balanced designs, and BIB.test, for partially balanced designs. In the following example, the agricolae data will be used (Joshi, 1987).

# Example linear estimation and design of experiments. (Joshi) # Institute of Social Sciences Agra, India # 6 varieties of wheat in 10 blocks of 3 plots each. block<-gl(10,3) variety<-c(1,2,3,1,2,4,1,3,5,1,4,6,1,5,6,2,3,6,2,4,5,2,5,6,3,4,5,3, 4,6) Y<-c(69,54,50,77,65,38,72,45,54,63,60,39,70,65,54,65,68,67,57,60,62, 59,65,63,75,62,61,59,55,56) head(cbind(block,variety,Y))

block variety Y

[1,] 1 1 69

[2,] 1 2 54

[3,] 1 3 50

[4,] 2 1 77

[5,] 2 2 65

[6,] 2 4 38BIB.test(block, variety, Y,console=TRUE)

ANALYSIS BIB: Y

Class level information

Block: 1 2 3 4 5 6 7 8 9 10

Trt : 1 2 3 4 5 6

Number of observations: 30

Analysis of Variance Table

Response: Y

Df Sum Sq Mean Sq F value Pr(>F)

block.unadj 9 467 51.9 0.90 0.547

trt.adj 5 1156 231.3 4.02 0.016 *

Residuals 15 863 57.5

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

coefficient of variation: 13 %

Y Means: 60

variety, statistics

Y mean.adj SE r std Min Max

1 70 75 3.7 5 5.1 63 77

2 60 59 3.7 5 4.9 54 65

3 59 59 3.7 5 12.4 45 75

4 55 55 3.7 5 9.8 38 62

5 61 60 3.7 5 4.5 54 65

6 56 54 3.7 5 10.8 39 67

LSD test

Std.diff : 5.4

Alpha : 0.05

LSD : 11

Parameters BIB

Lambda : 2

treatmeans : 6

Block size : 3

Blocks : 10

Replication: 5

Efficiency factor 0.8

<<< Book >>>

Comparison between treatments means

Difference pvalue sig.

1 - 2 16.42 0.0080 **

1 - 3 16.58 0.0074 **

1 - 4 20.17 0.0018 **

1 - 5 15.08 0.0132 *

1 - 6 20.75 0.0016 **

2 - 3 0.17 0.9756

2 - 4 3.75 0.4952

2 - 5 -1.33 0.8070

2 - 6 4.33 0.4318

3 - 4 3.58 0.5142

3 - 5 -1.50 0.7836

3 - 6 4.17 0.4492

4 - 5 -5.08 0.3582

4 - 6 0.58 0.9148

5 - 6 5.67 0.3074

Treatments with the same letter are not significantly different.

Y groups

1 75 a

5 60 b

2 59 b

3 59 b

4 55 b

6 54 bfunction (block, trt, Y, test = c(“lsd”, “tukey”, “duncan”, “waller”, “snk”), alpha = 0.05, group = TRUE) LSD, Tukey Duncan, Waller-Duncan and SNK, can be used. The probabilities of the comparison can also be obtained. It should only be indicated: group=FALSE, thus:

out <-BIB.test(block, trt=variety, Y, test="tukey", group=FALSE, console=TRUE)

ANALYSIS BIB: Y

Class level information

Block: 1 2 3 4 5 6 7 8 9 10

Trt : 1 2 3 4 5 6

Number of observations: 30

Analysis of Variance Table

Response: Y

Df Sum Sq Mean Sq F value Pr(>F)

block.unadj 9 467 51.9 0.90 0.547

trt.adj 5 1156 231.3 4.02 0.016 *

Residuals 15 863 57.5

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

coefficient of variation: 13 %

Y Means: 60

variety, statistics

Y mean.adj SE r std Min Max

1 70 75 3.7 5 5.1 63 77

2 60 59 3.7 5 4.9 54 65

3 59 59 3.7 5 12.4 45 75

4 55 55 3.7 5 9.8 38 62

5 61 60 3.7 5 4.5 54 65

6 56 54 3.7 5 10.8 39 67

Tukey

Alpha : 0.05

Std.err : 3.8

HSD : 17

Parameters BIB

Lambda : 2

treatmeans : 6

Block size : 3

Blocks : 10

Replication: 5

Efficiency factor 0.8

<<< Book >>>

Comparison between treatments means

Difference pvalue sig.

1 - 2 16.42 0.070 .

1 - 3 16.58 0.067 .

1 - 4 20.17 0.019 *

1 - 5 15.08 0.110

1 - 6 20.75 0.015 *

2 - 3 0.17 1.000

2 - 4 3.75 0.979

2 - 5 -1.33 1.000

2 - 6 4.33 0.962

3 - 4 3.58 0.983

3 - 5 -1.50 1.000

3 - 6 4.17 0.967

4 - 5 -5.08 0.927

4 - 6 0.58 1.000

5 - 6 5.67 0.891 names(out)

[1] "parameters" "statistics" "comparison" "means" "groups" rm(block,variety)

bar.group: out$groups

bar.err: out$means

Partially Balanced Incomplete Blocks

The function PBIB.test (Joshi, 1987), can be used for the lattice and alpha designs.

Consider the following case: Construct the alpha design with 30 treatments, 2 repetitions, and a block size equal to 3.

# alpha design Genotype<-paste("geno",1:30,sep="") r<-2 k<-3 plan<-design.alpha(Genotype,k,r,seed=5)

Alpha Design (0,1) - Serie I

Parameters Alpha Design

=======================

Treatmeans : 30

Block size : 3

Blocks : 10

Replication: 2

Efficiency factor

(E ) 0.62

<<< Book >>>The generated plan is plan$book. Suppose that the corresponding observation to each experimental unit is:

yield <-c(5,2,7,6,4,9,7,6,7,9,6,2,1,1,3,2,4,6,7,9,8,7,6,4,3,2,2,1,1, 2,1,1,2,4,5,6,7,8,6,5,4,3,1,1,2,5,4,2,7,6,6,5,6,4,5,7,6,5,5,4)

The data table is constructed for the analysis. In theory, it is presumed that a design is applied and the experiment is carried out; subsequently, the study variables are observed from each experimental unit.

data<-data.frame(plan$book,yield) # The analysis: modelPBIB <- with(data,PBIB.test(block, Genotype, replication, yield, k=3, group=TRUE, console=TRUE))

ANALYSIS PBIB: yield

Class level information

block : 20

Genotype : 30

Number of observations: 60

Estimation Method: Residual (restricted) maximum likelihood

Parameter Estimates

Variance

block:replication 3.8e+00

replication 6.1e-09

Residual 1.7e+00

Fit Statistics

AIC 214

BIC 260

-2 Res Log Likelihood -74

Analysis of Variance Table

Response: yield

Df Sum Sq Mean Sq F value Pr(>F)

Genotype 29 69.2 2.39 1.4 0.28

Residuals 11 18.7 1.70

Coefficient of variation: 29 %

yield Means: 4.5

Parameters PBIB

.

Genotype 30

block size 3

block/replication 10

replication 2

Efficiency factor 0.62

Comparison test lsd

Treatments with the same letter are not significantly different.

yield.adj groups

geno10 7.7 a

geno19 6.7 ab

geno1 6.7 ab

geno9 6.4 abc

geno18 6.1 abc

geno16 5.7 abcd

geno26 5.2 abcd

geno8 5.2 abcd

geno17 5.2 abcd

geno29 4.9 abcd

geno27 4.9 abcd

geno11 4.9 abcd

geno30 4.8 abcd

geno22 4.5 abcd

geno28 4.5 abcd

geno5 4.2 abcd

geno14 4.1 abcd

geno23 4.0 abcd

geno15 3.9 abcd

geno2 3.8 bcd

geno4 3.8 bcd

geno3 3.7 bcd

geno25 3.6 bcd

geno12 3.6 bcd

geno6 3.6 bcd

geno21 3.4 bcd

geno24 3.0 bcd

geno13 2.8 cd

geno20 2.7 cd

geno7 2.3 d

<<< to see the objects: means, comparison and groups. >>>The adjusted averages can be extracted from the modelPBIB.

head(modelPBIB$means)

The comparisons:

head(modelPBIB$comparison)

The data on the adjusted averages and their variation can be illustrated with the functions plot.group and bar.err. Since the created object is very similar to the objects generated by the multiple comparisons.

Analysis of balanced lattice 3x3, 9 treatments, 4 repetitions.

Create the data in a text file: latice3x3.txt and read with R:

trt<-c(1,8,5,5,2,9,2,7,3,6,4,9,4,6,9,8,7,6,1,5,8,3,2,7,3,7,2,1,3,4,6,4,9,5,8,1) yield<-c(48.76,10.83,12.54,11.07,22,47.43,27.67,30,13.78,37,42.37,39,14.46,30.69,42.01, 22,42.8,28.28,50,24,24,15.42,30,23.8,19.68,31,23,41,12.9,49.95,25,45.57,30,20,18,43.81) sqr<-rep(gl(4,3),3) block<-rep(1:12,3) modelLattice<-PBIB.test(block,trt,sqr,yield,k=3,console=TRUE, method="VC")

ANALYSIS PBIB: yield

Class level information

block : 12

trt : 9

Number of observations: 36

Estimation Method: Variances component model

Fit Statistics

AIC 265

BIC 298

Analysis of Variance Table

Response: yield

Df Sum Sq Mean Sq F value Pr(>F)

sqr 3 133 44 0.69 0.57361

trt.unadj 8 3749 469 7.24 0.00042 ***

block/sqr 8 368 46 0.71 0.67917

Residual 16 1036 65

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Coefficient of variation: 28 %

yield Means: 29

Parameters PBIB

.

trt 9

block size 3

block/sqr 3

sqr 4

Efficiency factor 0.75

Comparison test lsd

Treatments with the same letter are not significantly different.

yield.adj groups

1 44 a

9 39 ab

4 39 ab

7 32 bc

6 31 bc

2 26 cd

8 18 d

5 17 d

3 15 d

<<< to see the objects: means, comparison and groups. >>>The adjusted averages can be extracted from the modelLattice.

print(modelLattice$means)

The comparisons:

head(modelLattice$comparison)

Augmented Blocks

The function DAU.test can be used for the analysis of the augmented block design. The data should be organized in a table, containing the blocks, treatments, and the response.

block<-c(rep("I",7),rep("II",6),rep("III",7)) trt<-c("A","B","C","D","g","k","l","A","B","C","D","e","i","A","B", "C", "D","f","h","j") yield<-c(83,77,78,78,70,75,74,79,81,81,91,79,78,92,79,87,81,89,96, 82) head(data.frame(block, trt, yield))

block trt yield

1 I A 83

2 I B 77

3 I C 78

4 I D 78

5 I g 70

6 I k 75The treatments are in each block:

by(trt,block,as.character)

block: I

[1] "A" "B" "C" "D" "g" "k" "l"

------------------------------------------------------------

block: II

[1] "A" "B" "C" "D" "e" "i"

------------------------------------------------------------

block: III

[1] "A" "B" "C" "D" "f" "h" "j"With their respective responses:

by(yield,block,as.character)

block: I

[1] "83" "77" "78" "78" "70" "75" "74"

------------------------------------------------------------

block: II

[1] "79" "81" "81" "91" "79" "78"

------------------------------------------------------------

block: III

[1] "92" "79" "87" "81" "89" "96" "82"Analysis:

modelDAU<- DAU.test(block,trt,yield,method="lsd",console=TRUE)

ANALYSIS DAU: yield

Class level information

Block: I II III

Trt : A B C D e f g h i j k l

Number of observations: 20

ANOVA, Treatment Adjusted

Analysis of Variance Table

Response: yield

Df Sum Sq Mean Sq F value Pr(>F)

block.unadj 2 360 180.0

trt.adj 11 285 25.9 0.96 0.55

Control 3 53 17.6 0.65 0.61

Control + control.VS.aug. 8 232 29.0 1.08 0.48

Residuals 6 162 27.0

ANOVA, Block Adjusted

Analysis of Variance Table

Response: yield

Df Sum Sq Mean Sq F value Pr(>F)

trt.unadj 11 576 52.3

block.adj 2 70 34.8 1.29 0.34

Control 3 53 17.6 0.65 0.61

Augmented 7 506 72.3 2.68 0.13

Control vs augmented 1 17 16.9 0.63 0.46

Residuals 6 162 27.0

coefficient of variation: 6.4 %

yield Means: 82

Critical Differences (Between)

Std Error Diff.

Two Control Treatments 4.2

Two Augmented Treatments (Same Block) 7.3

Two Augmented Treatments(Different Blocks) 8.2

A Augmented Treatment and A Control Treatment 6.4

Treatments with the same letter are not significantly different.

yield groups

h 94 a

f 86 ab

A 85 ab

D 83 ab

C 82 ab

j 80 ab

B 79 ab

e 78 ab

k 78 ab

i 77 ab

l 77 ab

g 73 b

Comparison between treatments means

<<< to see the objects: comparison and means >>>options(digits = 2) modelDAU$means

yield std r Min Max Q25 Q50 Q75 mean.adj SE block

A 85 6.7 3 79 92 81 83 88 85 3.0

B 79 2.0 3 77 81 78 79 80 79 3.0

C 82 4.6 3 78 87 80 81 84 82 3.0

D 83 6.8 3 78 91 80 81 86 83 3.0

e 79 NA 1 79 79 79 79 79 78 5.2 II

f 89 NA 1 89 89 89 89 89 86 5.2 III

g 70 NA 1 70 70 70 70 70 73 5.2 I

h 96 NA 1 96 96 96 96 96 94 5.2 III

i 78 NA 1 78 78 78 78 78 77 5.2 II

j 82 NA 1 82 82 82 82 82 80 5.2 III

k 75 NA 1 75 75 75 75 75 78 5.2 I

l 74 NA 1 74 74 74 74 74 77 5.2 ImodelDAU<- DAU.test(block,trt,yield,method="lsd",group=FALSE,console=FALSE) head(modelDAU$comparison,8)

Difference pvalue sig.

A - B 5.7 0.23

A - C 2.7 0.55

A - D 1.3 0.76

A - e 6.4 0.35

A - f -1.8 0.78

A - g 11.4 0.12

A - h -8.8 0.21

A - i 7.4 0.29 Non-parametric Comparisons

The functions for non-parametric multiple comparisons included in agricolae are: kruskal, waerden.test, friedman and durbin.test (Conover, 1999).

The post hoc nonparametrics tests (kruskal, friedman, durbin and waerden) are using the criterium Fisher’s least significant difference (LSD).

The function kruskal is used for N samples (N>2), populations or data coming from a completely random experiment (populations = treatments).

The function waerden.test, similar to kruskal-wallis, uses a normal score instead of ranges as kruskal does.

The function friedman is used for organoleptic evaluations of different products, made by judges (every judge evaluates all the products). It can also be used for the analysis of treatments of the randomized complete block design, where the response cannot be treated through the analysis of variance.

The function durbin.test for the analysis of balanced incomplete block designs is very used for sampling tests, where the judges only evaluate a part of the treatments.

The function Median.test for the analysis the distribution is approximate with chi-squared ditribution with degree free number of groups minus one. In each comparison a table of \(2 \times 2\) (pair of groups) and the criterion of greater or lesser value than the median of both are formed, the chi-square test is applied for the calculation of the probability of error that both are independent. This value is compared to the alpha level for group formation.

Montgomery book data (Montgomery, 2002). Included in the agricolae package

'data.frame': 34 obs. of 3 variables:

$ method : int 1 1 1 1 1 1 1 1 1 2 ...

$ observation: int 83 91 94 89 89 96 91 92 90 91 ...

$ rx : num 11 23 28.5 17 17 31.5 23 26 19.5 23 ...For the examples, the agricolae** package data will be used**

Kruskal-Wallis

It makes the multiple comparison with Kruskal-Wallis. The parameters by default are alpha = 0.05.

str(kruskal)

function (y, trt, alpha = 0.05, p.adj = c("none", "holm", "hommel", "hochberg",

"bonferroni", "BH", "BY", "fdr"), group = TRUE, main = NULL, console = FALSE) Analysis

Study: corn

Kruskal-Wallis test's

Ties or no Ties

Critical Value: 26

Degrees of freedom: 3

Pvalue Chisq : 1.1e-05

method, means of the ranks

observation r

1 21.8 9

2 15.3 10

3 29.6 7

4 4.8 8

Post Hoc Analysis

t-Student: 2

Alpha : 0.05

Groups according to probability of treatment differences and alpha level.

Treatments with the same letter are not significantly different.

observation groups

3 29.6 a

1 21.8 b

2 15.3 c

4 4.8 dThe object output has the same structure of the comparisons see the functions plot.group(agricolae), bar.err(agricolae) and bar.group(agricolae).

Kruskal-Wallis: adjust P-values

To see p.adjust.methods()

observation groups

3 29.6 a

1 21.8 b

2 15.3 c

4 4.8 dout<-with(corn,kruskal(observation,method,group=FALSE, main="corn", p.adj="holm")) print(out$comparison)

Difference pvalue Signif.

1 - 2 6.5 0.0079 **

1 - 3 -7.7 0.0079 **

1 - 4 17.0 0.0000 ***

2 - 3 -14.3 0.0000 ***

2 - 4 10.5 0.0003 ***

3 - 4 24.8 0.0000 ***Friedman

The data consist of b mutually independent k-variate random variables called b blocks. The random variable is in a block and is associated with treatment. It makes the multiple comparison of the Friedman test with or without ties. A first result is obtained by friedman.test of R.

str(friedman)

function (judge, trt, evaluation, alpha = 0.05, group = TRUE, main = NULL,

console = FALSE) Analysis

data(grass) out<-with(grass,friedman(judge,trt, evaluation,alpha=0.05, group=FALSE, main="Data of the book of Conover",console=TRUE))

Study: Data of the book of Conover

trt, Sum of the ranks

evaluation r

t1 38 12

t2 24 12

t3 24 12

t4 34 12

Friedman's Test

===============

Adjusted for ties

Critical Value: 8.1

P.Value Chisq: 0.044

F Value: 3.2

P.Value F: 0.036

Post Hoc Analysis

Comparison between treatments

Sum of the ranks

difference pvalue signif. LCL UCL

t1 - t2 14.5 0.015 * 3.0 25.98

t1 - t3 13.5 0.023 * 2.0 24.98

t1 - t4 4.0 0.483 -7.5 15.48

t2 - t3 -1.0 0.860 -12.5 10.48

t2 - t4 -10.5 0.072 . -22.0 0.98

t3 - t4 -9.5 0.102 -21.0 1.98Waerden

A nonparametric test for several independent samples. Example applied with the sweet potato data in the agricolae basis.

str(waerden.test)

function (y, trt, alpha = 0.05, group = TRUE, main = NULL, console = FALSE) Analysis

data(sweetpotato) outWaerden<-with(sweetpotato,waerden.test(yield,virus,alpha=0.01,group=TRUE,console=TRUE))

Study: yield ~ virus

Van der Waerden (Normal Scores) test's

Value : 8.4

Pvalue: 0.038

Degrees of Freedom: 3

virus, means of the normal score

yield std r

cc -0.23 0.30 3

fc -1.06 0.35 3

ff 0.69 0.76 3

oo 0.60 0.37 3

Post Hoc Analysis

Alpha: 0.01 ; DF Error: 8

Minimum Significant Difference: 1.3

Treatments with the same letter are not significantly different.

Means of the normal score

score groups

ff 0.69 a

oo 0.60 a

cc -0.23 ab

fc -1.06 bThe comparison probabilities are obtained with the parameter group = FALSE.

names(outWaerden)

[1] "statistics" "parameters" "means" "comparison" "groups" To see outWaerden$comparison

out<-with(sweetpotato,waerden.test(yield,virus,group=FALSE,console=TRUE))

Study: yield ~ virus

Van der Waerden (Normal Scores) test's

Value : 8.4

Pvalue: 0.038

Degrees of Freedom: 3

virus, means of the normal score

yield std r

cc -0.23 0.30 3

fc -1.06 0.35 3

ff 0.69 0.76 3

oo 0.60 0.37 3

Post Hoc Analysis

Comparison between treatments

mean of the normal score

difference pvalue signif. LCL UCL

cc - fc 0.827 0.0690 . -0.082 1.736

cc - ff -0.921 0.0476 * -1.830 -0.013

cc - oo -0.837 0.0664 . -1.746 0.072

fc - ff -1.749 0.0022 ** -2.658 -0.840

fc - oo -1.665 0.0029 ** -2.574 -0.756

ff - oo 0.084 0.8363 -0.825 0.993Median test

A nonparametric test for several independent samples. The median test is designed to examine whether several samples came from populations having the same median (Conover, 1999). See also Figure @ref(fig:f14).

In each comparison a table of 2x2 (pair of groups) and the criterion of greater or lesser value than the median of both are formed, the chi-square test is applied for the calculation of the probability of error that both are independent. This value is compared to the alpha level for group formation.

str(Median.test)

function (y, trt, alpha = 0.05, correct = TRUE, simulate.p.value = FALSE,

group = TRUE, main = NULL, console = TRUE) str(Median.test)

function (y, trt, alpha = 0.05, correct = TRUE, simulate.p.value = FALSE,

group = TRUE, main = NULL, console = TRUE) Analysis

data(sweetpotato) outMedian<-with(sweetpotato,Median.test(yield,virus,console=TRUE))

The Median Test for yield ~ virus

Chi Square = 6.7 DF = 3 P.Value 0.083

Median = 28

Median r Min Max Q25 Q75

cc 23 3 22 28 22 26

fc 13 3 11 15 12 14

ff 39 3 28 42 34 40

oo 38 3 32 40 35 39

Post Hoc Analysis

Groups according to probability of treatment differences and alpha level.

Treatments with the same letter are not significantly different.

yield groups

ff 39 a

oo 38 a

cc 23 a

fc 13 bnames(outMedian)

[1] "statistics" "parameters" "medians" "comparison" "groups" outMedian$statistics

Chisq Df p.chisq Median

6.7 3 0.083 28outMedian$medians

Median r Min Max Q25 Q75

cc 23 3 22 28 22 26

fc 13 3 11 15 12 14

ff 39 3 28 42 34 40

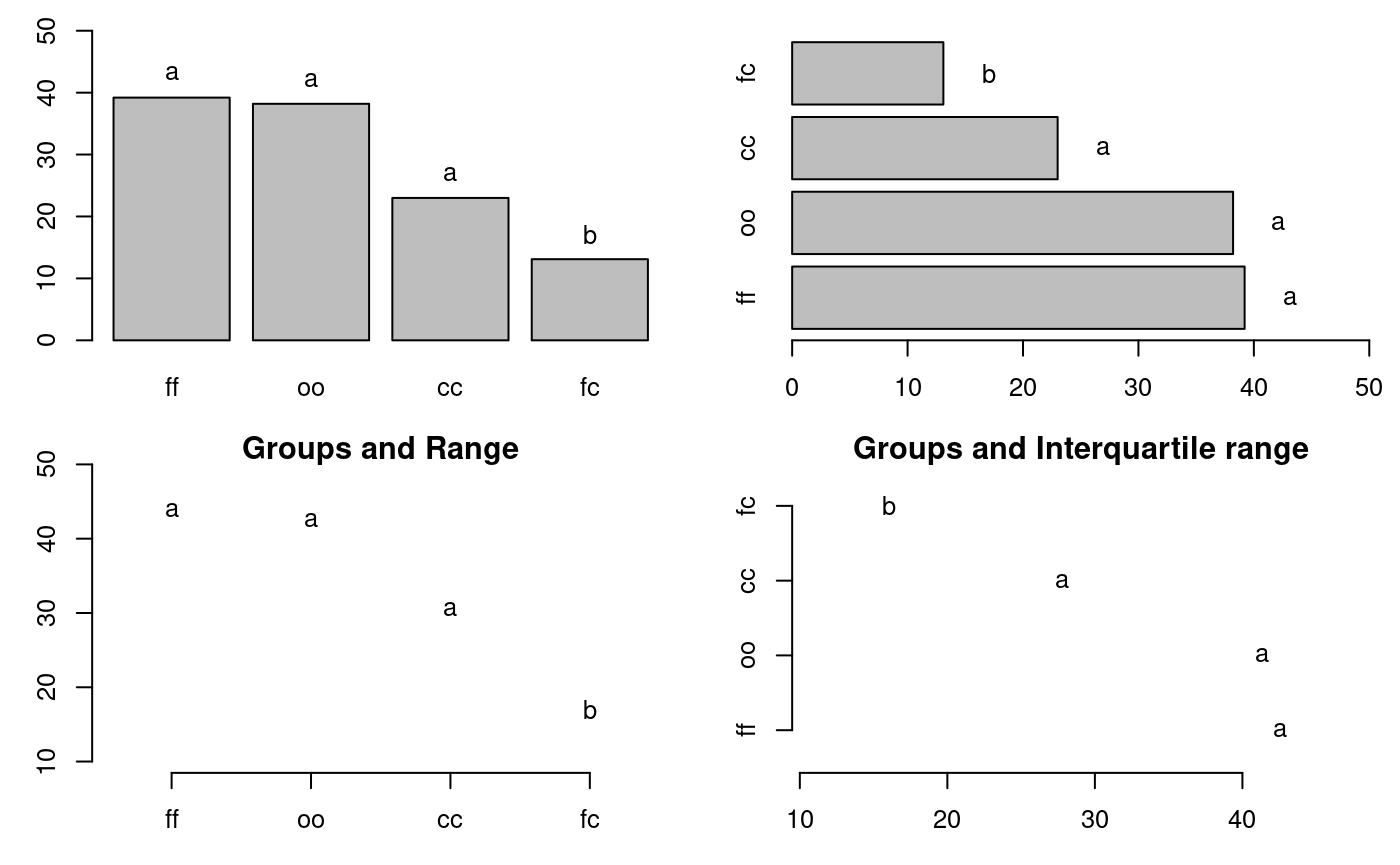

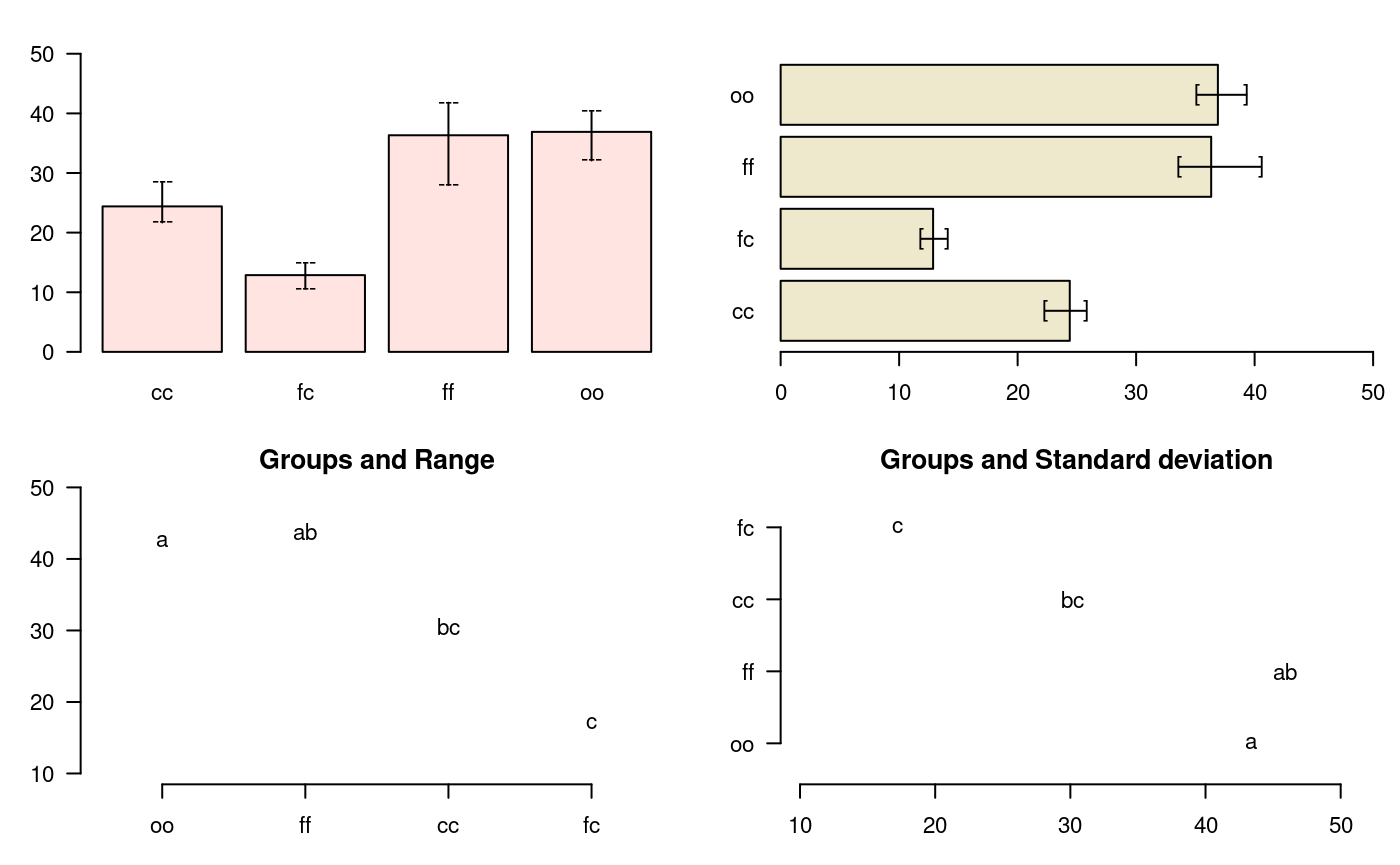

oo 38 3 32 40 35 39oldpar<-par(mfrow=c(2,2),mar=c(3,3,1,1),cex=0.8) # Graphics bar.group(outMedian$groups,ylim=c(0,50)) bar.group(outMedian$groups,xlim=c(0,50),horiz = TRUE) plot(outMedian)

Warning in plot.group(outMedian): NAs introduced by coercionplot(outMedian,variation="IQR",horiz = TRUE)

Warning in plot.group(outMedian, variation = "IQR", horiz = TRUE): NAs

introduced by coercion

Grouping of treatments and its variation, Median method

par(oldpar)

Durbin

durbin.test; example: Myles Hollander (p. 311) Source: W. Moore and C.I. Bliss. (1942) A multiple comparison of the Durbin test for the balanced incomplete blocks for sensorial or categorical evaluation. It forms groups according to the demanded ones for level of significance (alpha); by default, 0.05.

str(durbin.test)

function (judge, trt, evaluation, alpha = 0.05, group = TRUE, main = NULL,

console = FALSE) Analysis

days <-gl(7,3) chemical<-c("A","B","D","A","C","E","C","D","G","A","F","G", "B","C","F", "B","E","G","D","E","F") toxic<-c(0.465,0.343,0.396,0.602,0.873,0.634,0.875,0.325,0.330, 0.423,0.987,0.426, 0.652,1.142,0.989,0.536,0.409,0.309, 0.609,0.417,0.931) head(data.frame(days,chemical,toxic))

days chemical toxic

1 1 A 0.47

2 1 B 0.34

3 1 D 0.40

4 2 A 0.60

5 2 C 0.87

6 2 E 0.63out<-durbin.test(days,chemical,toxic,group=FALSE,console=TRUE, main="Logarithm of the toxic dose")

Study: Logarithm of the toxic dose

chemical, Sum of ranks

sum

A 5

B 5

C 9

D 5

E 5

F 8

G 5

Durbin Test

===========

Value : 7.7

DF 1 : 6

P-value : 0.26

Alpha : 0.05

DF 2 : 8

t-Student : 2.3

Least Significant Difference

between the sum of ranks: 5

Parameters BIB

Lambda : 1

Treatmeans : 7

Block size : 3

Blocks : 7

Replication: 3

Comparison between treatments

Sum of the ranks

difference pvalue signif.

A - B 0 1.00

A - C -4 0.10

A - D 0 1.00

A - E 0 1.00

A - F -3 0.20

A - G 0 1.00

B - C -4 0.10

B - D 0 1.00

B - E 0 1.00

B - F -3 0.20

B - G 0 1.00

C - D 4 0.10

C - E 4 0.10

C - F 1 0.66

C - G 4 0.10

D - E 0 1.00

D - F -3 0.20

D - G 0 1.00

E - F -3 0.20

E - G 0 1.00

F - G 3 0.20 names(out)

[1] "statistics" "parameters" "means" "rank" "comparison"

[6] "groups" out$statistics

chisq.value p.value t.value LSD

7.7 0.26 2.3 5Graphics of the multiple comparison

The results of a comparison can be graphically seen with the functions bar.group, bar.err and diffograph.

bar.group

A function to plot horizontal or vertical bar, where the letters of groups of treatments is expressed. The function applies to all functions comparison treatments. Each object must use the group object previously generated by comparative function in indicating that group = TRUE.

Example

# model <-aov (yield ~ fertilizer, data = field) # out <-LSD.test (model, "fertilizer", group = TRUE) # bar.group (out$group) str(bar.group)

function (x, horiz = FALSE, ...) The Median test with option group=TRUE (default) is used in the exercise.

bar.err

A function to plot horizontal or vertical bar, where the variation of the error is expressed in every treatments. The function applies to all functions comparison treatments. Each object must use the means object previously generated by the comparison function, see Figure @ref(fig:f4)

# model <-aov (yield ~ fertilizer, data = field) # out <-LSD.test (model, "fertilizer", group = TRUE) # bar.err(out$means) str(bar.err)

function (x, variation = c("SE", "SD", "range", "IQR"), horiz = FALSE,

bar = TRUE, ...) variation

SE: Standard error

SD: standard deviation

range: max-min

oldpar<-par(mfrow=c(2,2),mar=c(3,3,2,1),cex=0.7) c1<-colors()[480]; c2=colors()[65] data(sweetpotato) model<-aov(yield~virus, data=sweetpotato) outHSD<- HSD.test(model, "virus",console=TRUE)

Study: model ~ "virus"

HSD Test for yield

Mean Square Error: 22

virus, means

yield std r Min Max

cc 24 3.6 3 22 28

fc 13 2.2 3 11 15

ff 36 7.3 3 28 42

oo 37 4.3 3 32 40

Alpha: 0.05 ; DF Error: 8

Critical Value of Studentized Range: 4.5

Minimun Significant Difference: 12

Treatments with the same letter are not significantly different.

yield groups

oo 37 a

ff 36 ab

cc 24 bc

fc 13 cbar.err(outHSD$means, variation="range",ylim=c(0,50),col=c1,las=1) bar.err(outHSD$means, variation="IQR",horiz=TRUE, xlim=c(0,50),col=c2,las=1) plot(outHSD, variation="range",las=1)

Warning in plot.group(outHSD, variation = "range", las = 1): NAs introduced by

coercionplot(outHSD, horiz=TRUE, variation="SD",las=1)

Warning in plot.group(outHSD, horiz = TRUE, variation = "SD", las = 1): NAs

introduced by coercion

Comparison between treatments

par(oldpar)

oldpar<-par(mfrow=c(2,2),cex=0.7,mar=c(3.5,1.5,3,1)) C1<-bar.err(modelPBIB$means[1:7, ], ylim=c(0,9), col=0, main="C1", variation="range",border=3,las=2) C2<-bar.err(modelPBIB$means[8:15,], ylim=c(0,9), col=0, main="C2", variation="range", border =4,las=2) # Others graphic C3<-bar.err(modelPBIB$means[16:22,], ylim=c(0,9), col=0, main="C3", variation="range",border =2,las=2) C4<-bar.err(modelPBIB$means[23:30,], ylim=c(0,9), col=0, main="C4", variation="range", border =6,las=2) # Lattice graphics par(oldpar) oldpar<-par(mar=c(2.5,2.5,1,0),cex=0.6) bar.group(modelLattice$group,ylim=c(0,55),density=10,las=1) par(oldpar)

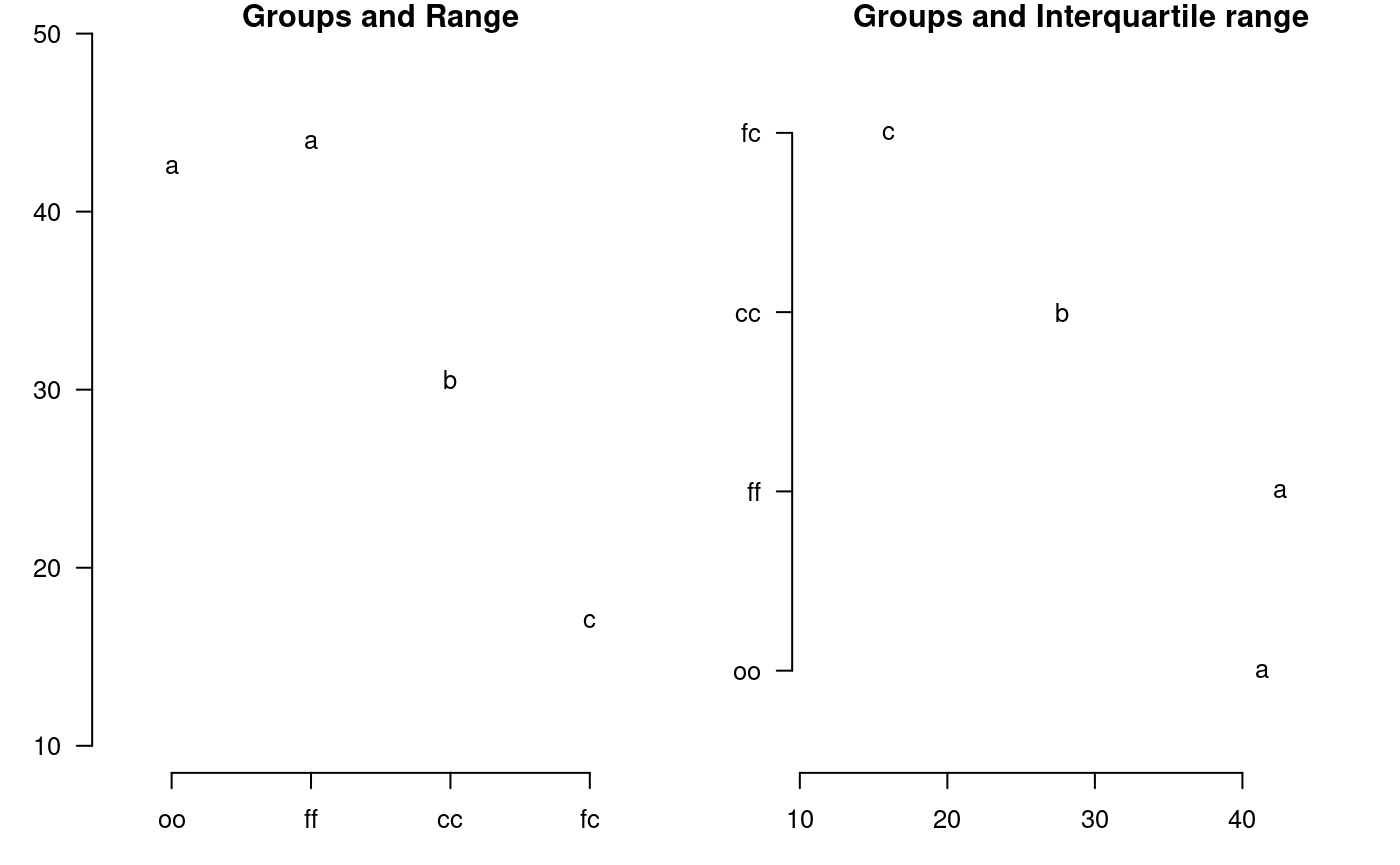

plot.group

It plot groups and variation of the treatments to compare. It uses the objects generated by a procedure of comparison like LSD (Fisher), duncan, Tukey (HSD), Student Newman Keul (SNK), Scheffe, Waller-Duncan, Ryan, Einot and Gabriel and Welsch (REGW), Kruskal Wallis, Friedman, Median, Waerden and other tests like Durbin, DAU, BIB, PBIB. The variation types are range (maximun and minimun), IQR (interquartile range), SD (standard deviation) and SE (standard error), see Figure @ref(fig:f13).

The function: plot.group() and their arguments are x (output of test), variation = c("range", "IQR", "SE", "SD"), horiz (TRUE or FALSE), xlim, ylim and main are optional plot() parameters and others plot parameters.

# model : yield ~ virus # Important group=TRUE oldpar<-par(mfrow=c(1,2),mar=c(3,3,1,1),cex=0.8) x<-duncan.test(model, "virus", group=TRUE) plot(x,las=1)

Warning in plot.group(x, las = 1): NAs introduced by coercionplot(x,variation="IQR",horiz=TRUE,las=1)

Warning in plot.group(x, variation = "IQR", horiz = TRUE, las = 1): NAs

introduced by coercion

Grouping of treatments and its variation, Duncan method

par(oldpar)

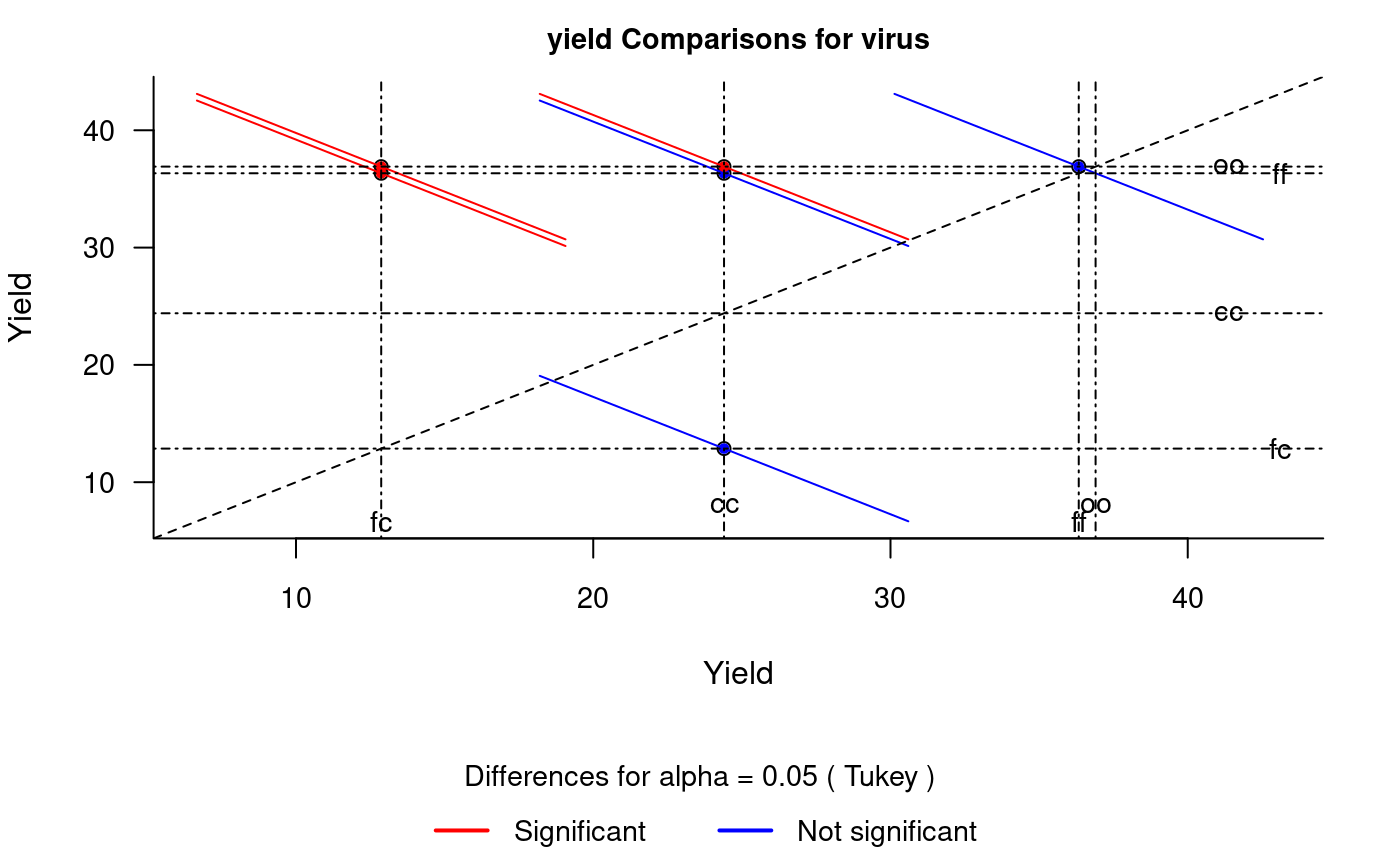

diffograph

It plots bars of the averages of treatments to compare. It uses the objects generated by a procedure of comparison like LSD (Fisher), duncan, Tukey (HSD), Student Newman Keul (SNK), Scheffe, Ryan, Einot and Gabriel and Welsch (REGW), Kruskal Wallis, Friedman and Waerden (Hsu, 1996) see Figure @ref(fig:f5).

# function (x, main = NULL, color1 = "red", color2 = "blue", # color3 = "black", cex.axis = 0.8, las = 1, pch = 20, # bty = "l", cex = 0.8, lwd = 1, xlab = "", ylab = "", # ...) # model : yield ~ virus # Important group=FALSE x<-HSD.test(model, "virus", group=FALSE) diffograph(x,cex.axis=0.9,xlab="Yield",ylab="Yield",cex=0.9)

Mean-Mean scatter plot representation of the Tukey method

Stability Analysis

In agricolae there are two methods for the study of stability and the AMMI model. These are: a parametric model for a simultaneous selection in yield and stability “SHUKLA’S STABILITY VARIANCE AND KANG’S”, (Kang, 1993) and a non-parametric method of Haynes, based on the data range.

Parametric Stability

Use the parametric model, function stability.par.

Prepare a data table where the rows and the columns are the genotypes and the environments, respectively. The data should correspond to yield averages or to another measured variable. Determine the variance of the common error for all the environments and the number of repetitions that was evaluated for every genotype. If the repetitions are different, find a harmonious average that will represent the set. Finally, assign a name to each row that will represent the genotype (Kang, 1993). We will consider five environments in the following example:

options(digit=2) f <- system.file("external/dataStb.csv", package="agricolae") dataStb<-read.csv(f) stability.par(dataStb, rep=4, MSerror=1.8, alpha=0.1, main="Genotype",console=TRUE)

INTERACTIVE PROGRAM FOR CALCULATING SHUKLA'S STABILITY VARIANCE AND KANG'S

YIELD - STABILITY (YSi) STATISTICS

Genotype

Environmental index - covariate

Analysis of Variance

Df Sum Sq Mean Sq F value Pr(>F)

Total 203 2964.1716

Genotypes 16 186.9082 11.6818 4.17 <0.001

Environments 11 2284.0116 207.6374 115.35 <0.001

Interaction 176 493.2518 2.8026 1.56 <0.001

Heterogeneity 16 44.8576 2.8036 1 0.459

Residual 160 448.3942 2.8025 1.56 <0.001

Pooled Error 576 1.8

Genotype. Stability statistics

Mean Sigma-square . s-square . Ecovalence

A 7.4 2.47 ns 2.45 ns 25.8

B 6.8 1.60 ns 1.43 ns 17.4

C 7.2 0.57 ns 0.63 ns 7.3

D 6.8 2.61 ns 2.13 ns 27.2

E 7.1 1.86 ns 2.05 ns 19.9

F 6.9 3.58 * 3.95 * 36.5

G 7.8 3.58 * 3.96 * 36.6

H 7.9 2.72 ns 2.12 ns 28.2

I 7.3 4.25 ** 3.94 * 43.0

J 7.1 2.27 ns 2.51 ns 23.9

K 6.4 2.56 ns 2.55 ns 26.7

L 6.9 1.56 ns 1.73 ns 16.9

M 6.8 3.48 * 3.28 ns 35.6

N 7.5 5.16 ** 4.88 ** 51.9

O 7.7 2.38 ns 2.64 ns 24.9

P 6.4 3.45 * 3.71 * 35.3

Q 6.2 3.53 * 3.69 * 36.1

Signif. codes: 0 '**' 0.01 '*' 0.05 'ns' 1

Simultaneous selection for yield and stability (++)

Yield Rank Adj.rank Adjusted Stab.var Stab.rating YSi ...

A 7.4 13 1 14 2.47 0 14 +

B 6.8 4 -1 3 1.60 0 3

C 7.2 11 1 12 0.57 0 12 +

D 6.8 4 -1 3 2.61 0 3

E 7.1 9 1 10 1.86 0 10 +

F 6.9 8 -1 7 3.58 -4 3

G 7.8 16 2 18 3.58 -4 14 +

H 7.9 17 2 19 2.72 0 19 +

I 7.3 12 1 13 4.25 -8 5

J 7.1 10 1 11 2.27 0 11 +

K 6.4 3 -2 1 2.56 0 1

L 6.9 7 -1 6 1.56 0 6

M 6.8 6 -1 5 3.48 -4 1

N 7.5 14 1 15 5.16 -8 7 +

O 7.7 15 2 17 2.38 0 17 +

P 6.4 2 -2 0 3.45 -4 -4

Q 6.2 1 -3 -2 3.53 -4 -6

Yield Mean: 7.1

YS Mean: 6.8

LSD (0.05): 0.45

- - - - - - - - - - -

+ selected genotype

++ Reference: Kang, M. S. 1993. Simultaneous selection for yield

and stability: Consequences for growers. Agron. J. 85:754-757.For 17 genotypes, the identification is made by letters. An error variance of 2 and 4 repetitions is assumed.

Analysis

output <- stability.par(dataStb, rep=4, MSerror=2) names(output)

[1] "analysis" "statistics" "stability" print(output$stability)

Yield Rank Adj.rank Adjusted Stab.var Stab.rating YSi ...

A 7.4 13 1 14 2.47 0 14 +

B 6.8 4 -1 3 1.60 0 3

C 7.2 11 1 12 0.57 0 12 +

D 6.8 4 -1 3 2.61 0 3

E 7.1 9 1 10 1.86 0 10 +

F 6.9 8 -1 7 3.58 -2 5

G 7.8 16 2 18 3.58 -2 16 +

H 7.9 17 2 19 2.72 0 19 +

I 7.3 12 1 13 4.25 -4 9 +

J 7.1 10 1 11 2.27 0 11 +

K 6.4 3 -2 1 2.56 0 1

L 6.9 7 -1 6 1.56 0 6

M 6.8 6 -1 5 3.48 -2 3

N 7.5 14 1 15 5.16 -8 7

O 7.7 15 2 17 2.38 0 17 +

P 6.4 2 -2 0 3.45 -2 -2

Q 6.2 1 -2 -1 3.53 -2 -3 The selected genotypes are: A, C, E, G, H, I, J and O. These genotypes have a higher yield and a lower variation. to see output$analysis, the interaction is significant.

If for example there is an environmental index, it can be added as a covariate In the first five locations. For this case, the altitude of the localities is included.

data5<-dataStb[,1:5] altitude<-c(1200, 1300, 800, 1600, 2400) stability <- stability.par(data5,rep=4,MSerror=2, cova=TRUE, name.cov= "altitude", file.cov=altitude)

Non-parametric Stability

For non-parametric stability, the function in agricolae is stability.nonpar(). The names of the genotypes should be included in the first column, and in the other columns, the response by environments (Haynes et al., 1998).

Analysis

data <- data.frame(name=row.names(dataStb), dataStb) output<-stability.nonpar(data, "YIELD", ranking=TRUE) names(output)

[1] "ranking" "statistics"output$statistics

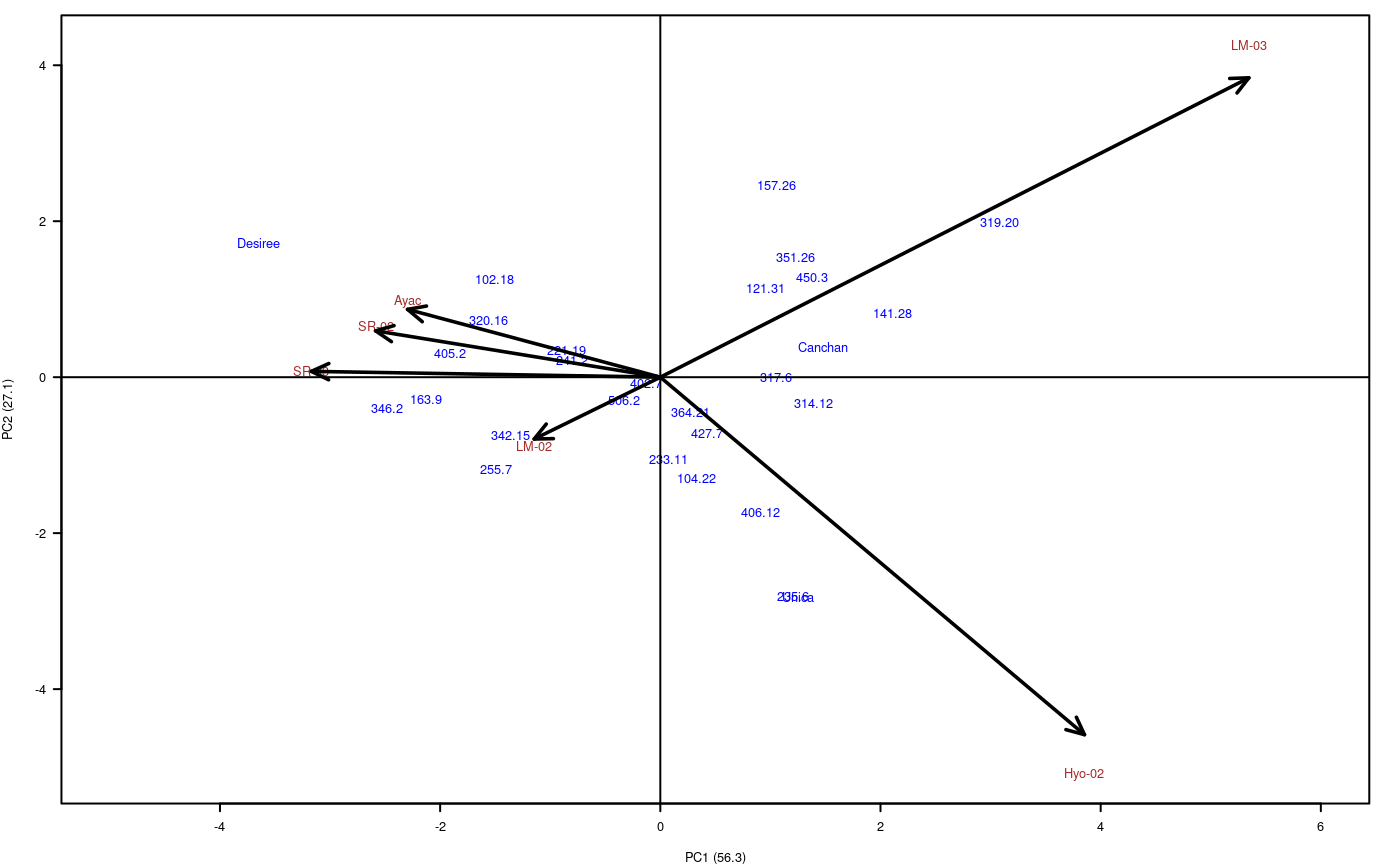

MEAN es1 es2 vs1 vs2 chi.ind chi.sum

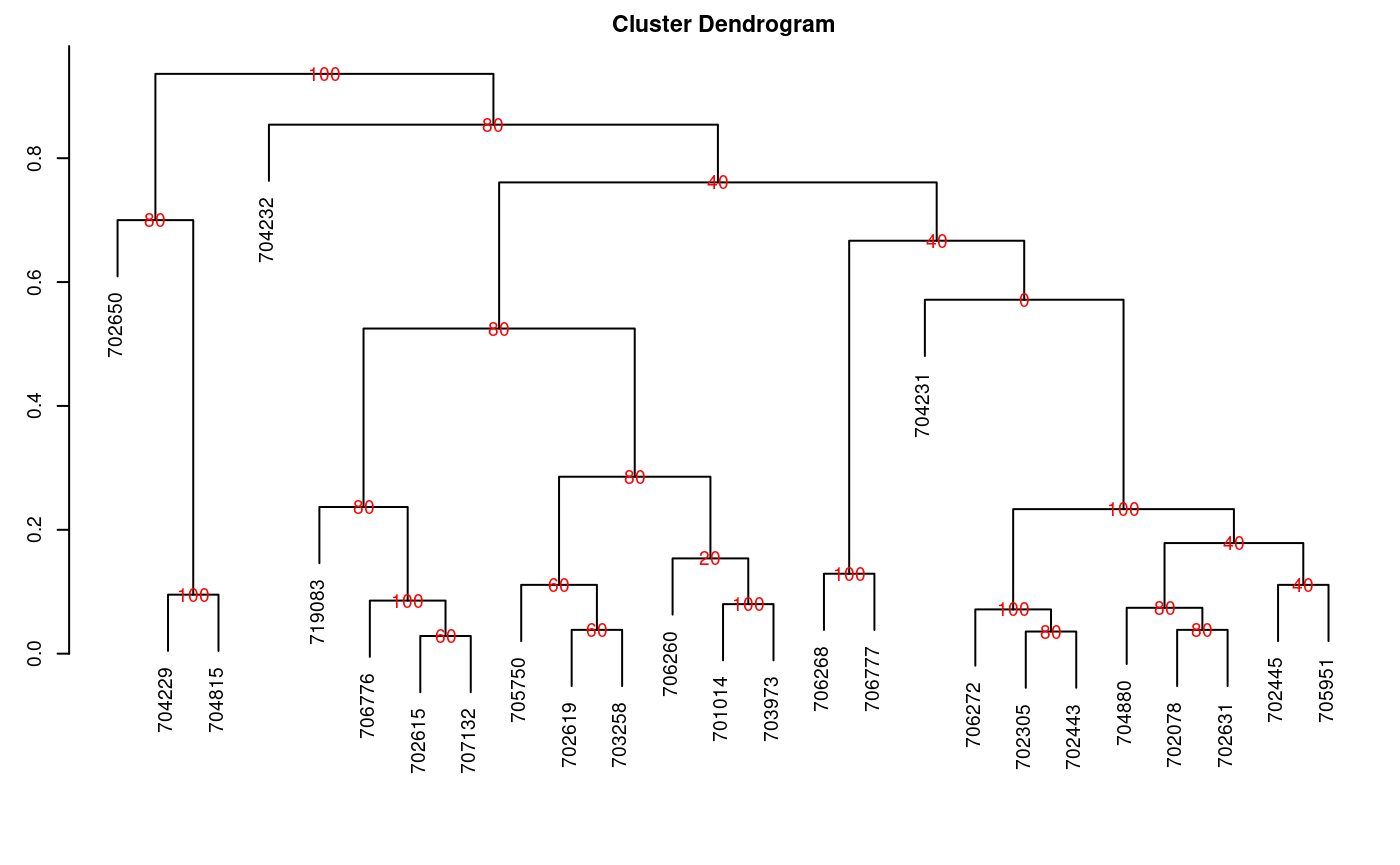

1 7.1 5.6 24 0.72 47 8.8 28AMMI